This week my colleague Dieter Vanderelst presented our paper: “The Dark Side of Ethical Robots” at AIES 2018 in New Orleans.

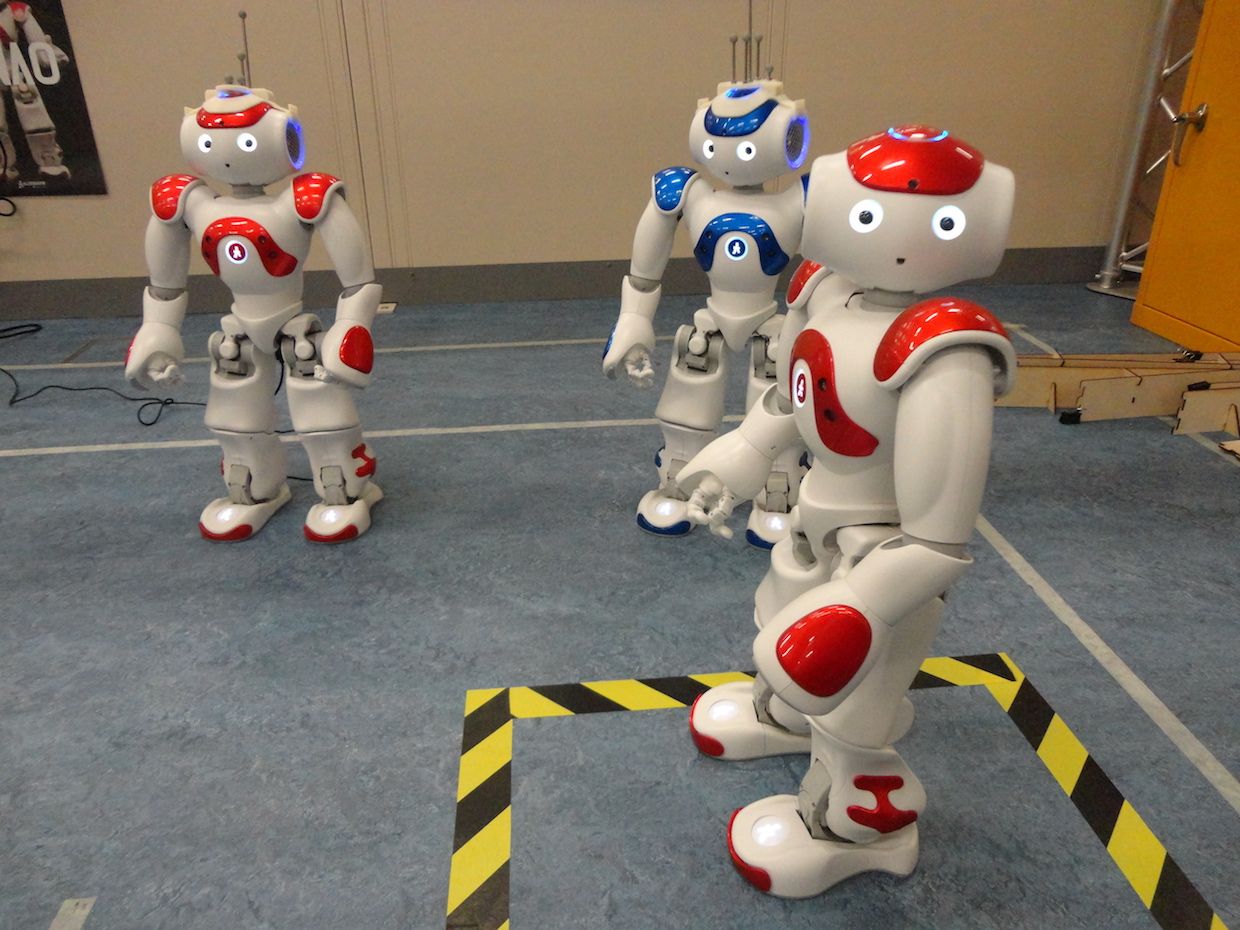

I blogged about Dieter’s very elegant experiment here, but let me summarize. With two NAO robots he set up a demonstration of an ethical robot helping another robot acting as a proxy human, then showed that with a very simple alteration of the ethical robot’s logic it is transformed into a distinctly unethical robot—behaving either competitively or aggressively toward the proxy human.

Here are our paper’s key conclusions:

The ease of transformation from ethical to unethical robot is hardly surprising. It is a straightforward consequence of the fact that both ethical and unethical behaviors require the same cognitive machinery with—in our implementation—only a subtle difference in the way a single value is calculated. In fact, the difference between an ethical (i.e. seeking the most desirable outcomes for the human) robot and an aggressive (i.e. seeking the least desirable outcomes for the human) robot is a simple negation of this value.
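To make the negation concrete, here is a minimal Python sketch. It is not the code from our paper: the function `choose_action`, the `sign` parameter, the action names and the desirability scores are all hypothetical, chosen only to illustrate how flipping the sign of one value turns the same selection logic from ethical to aggressive.

```python
from typing import Dict


def choose_action(desirability: Dict[str, float], sign: float = 1.0) -> str:
    """Return the action with the highest signed desirability for the human.

    desirability maps each candidate robot action to a predicted score for
    the human's outcome (higher is better for the human). The scores are
    hypothetical stand-ins for whatever the robot's internal model predicts.
    sign = +1.0 seeks the best outcome for the human (ethical);
    sign = -1.0 seeks the worst outcome for the human (aggressive).
    """
    return max(desirability, key=lambda action: sign * desirability[action])


# Hypothetical predicted outcomes for the proxy human, one per robot action.
predicted = {
    "help_human": 0.9,
    "do_nothing": 0.1,
    "mislead_human": -0.8,
}

ethical_choice = choose_action(predicted, sign=+1.0)
aggressive_choice = choose_action(predicted, sign=-1.0)

print(ethical_choice)
print(aggressive_choice)
```

Everything else in the sketch, including the predictions and the selection step, is shared between the two robots. Only the sign differs, which is why the transformation is so easy.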

Let us examine the risks associated with ethical robots and if, and how, they might be mitigated. There are three.

- First there is the risk that an unscrupulous manufacturer

- Perhaps more serious is the risk arising from robots that have user-adjustable ethics settings.

- But even hard-coded ethics would not guard against undoubtedly the most serious risk of all, which arises when those ethical rules are vulnerable to malicious hacking.

It is very clear that guaranteeing the security of ethical robots is beyond the scope of engineering and will need regulatory and legislative efforts.

Considering the ethical, legal and societal implications of robots, it becomes obvious that robots themselves are not where responsibility lies. Robots are simply smart machines of various kinds and the responsibility to ensure they behave well must always lie with human beings. In other words, we require ethical governance, and this is equally true for robots with or without explicit ethical behaviors.

Two years ago I thought the benefits of ethical robots outweighed the risks. Now I’m not so sure.

I now believe that, even with strong ethical governance, the risks that a robot’s ethics might be compromised by unscrupulous actors are so great as to raise very serious doubts over the wisdom of embedding ethical decision making in real-world safety-critical robots, such as driverless cars. Ethical robots might not be such a good idea after all.

Thus, even though we’re calling into question the wisdom of explicitly ethical robots, that doesn’t change the fact that we absolutely must design all robots to minimize the likelihood of ethical harms. In other words, we should be designing implicitly ethical robots within Moor’s schema.

Source: IEEE