Much appreciation for the ‘acknowledgements’ in Joel Janhonen’s paper, published November 21, 2023 in Springer’s journal “AI and Ethics”.

Morals/Ethics/Values

The Deadly Recklessness of the Self-Driving Industry

“Tesla,” Stilgoe tells me, “is turning a blind eye to their drivers’ own experiments with Autopilot. People are using Autopilot irresponsibly, and Tesla are overlooking it because they are gathering data.” #ai #aiethics

The Deadly Recklessness of https://t.co/wLcaqqn00h (Phil & Pam Lawson, @SocializingAI, December 14, 2018)

Let’s be clear about this, because it seems to me that these companies have gotten a bit of a pass for undertaking a difficult, potentially ‘revolutionary’ technology, and because blame can appear nebulous in car crashes, or in these cases can be directed toward the humans who were supposed to be watching the road. These companies’ actions (or sometimes, lack of action) have led to loss of life and limb. In the process, they have darkened the outlook for the field in general, sapping public trust in self-driving cars and delaying the rollout of what many hope will be a life-saving technology.

- According to an email recently obtained by The Information, Uber’s self-driving car division may not only be reckless, but outright negligent. The company’s executive staff reportedly ignored detailed calls from its own safety team and continued unsafe practices, and a pedestrian died. Before that, a host of accidents and near-misses had gone unheeded.

- At least one major executive in Google’s autonomous car division reportedly exempted himself from test program protocol, directly caused a serious crash, injured his passenger, and never informed police that it was caused by a self-driving car. Waymo, now a subsidiary of Google, has been involved, by my count, in 21 reported crashes this year, according to California DMV records, though it was at fault in one.

But the fact is, those of us already on the road in our not-so-autonomous cars have little to no say over how we coexist with self-driving ones, or over whether we’re sharing the streets with AVs running on shoddy software or overseen by less-than-alert human drivers because executives don’t want to lag in their quest to vacuum up road data or miss a sales opportunity.

Source: Gizmodo – Brian Merchant

“Moral Machine” reveals deep split in autonomous car ethics

In the Moral Machine game, users were required to decide whether an autonomous car careened into unexpected pedestrians or animals, or swerved away from them, killing or injuring the passengers.

The scenario played out in ways that probed nine types of dilemmas, asking users to make judgements based on species, the age or gender of the pedestrians, and the number of pedestrians involved. Sometimes other factors were added. Pedestrians might be pregnant, for instance, or be obviously members of very high or very low socio-economic classes.

All up, the researchers collected 39.61 million decisions from 233 countries, dependencies, or territories.

On the positive side, there was a clear consensus on some dilemmas.

“The strongest preferences are observed for sparing humans over animals, sparing more lives, and sparing young lives.”

“Accordingly, these three preferences may be considered essential building blocks for machine ethics, or at least essential topics to be considered by policymakers.”

The four most spared characters in the game, they report, were “the baby, the little girl, the little boy, and the pregnant woman”.

So far, then, so universal, but after that divisions in decision-making started to appear and do so quite starkly. The determinants, it seems, were social, cultural and perhaps even economic.

Awad’s team noted, for instance, that there were significant differences between “individualistic cultures and collectivistic cultures” – a division that also correlated, albeit roughly, with North American and European cultures in the former group and Asian cultures in the latter.

In individualistic cultures – “which emphasise the distinctive value of each individual” – there was an emphasis on saving a greater number of characters. In collectivistic cultures – “which emphasise the respect that is due to older members of the community” – there was a weaker emphasis on sparing the young.

Given that car-makers and models are manufactured on a global scale, with regional differences extending only to matters such as which side the steering wheel should be on and what the badge says, the finding flags a major issue for the people who will eventually have to program the behaviour of the vehicles.

Source: Cosmos

Tim Berners-Lee on the huge sociotechnical design challenge

Coding must mean consciously grappling with ethical choices in addition to architecting systems that respect core human rights like privacy, he suggested.

“Ethics, like technology, is design.”

“As we’re designing the system, we’re designing society. Ethical rules that we choose to put in that design [impact the society]… Nothing is self-evident. Everything has to be put out there as something that we think will be a good idea as a component of our society.”

If your tech philosophy is the equivalent of ‘move fast and break things’, it’s a failure of both imagination and innovation to not also keep rethinking policies and terms of service (“to a certain extent from scratch”) to account for fresh social impacts, he argued in the speech.

He described today’s digital platforms as “sociotechnical systems”, meaning “it’s not just about the technology when you click on the link; it is about the motivation someone has to make such a great thing, because then they are read, and the excitement they get just knowing that other people are reading the things that they have written”.

“We must consciously decide on both of these, both the social side and the technical side.”

“[These platforms are] anthropogenic, made by people … Facebook and Twitter are anthropogenic. They’re made by people. They’re coded by people. And the people who code them are constantly trying to figure out how to make them better.”

Source: Techcrunch

The reckoning over social media has transformed SXSW

“Fifteen years ago, when we were coming here to Austin to talk about the internet, it was this magical place that was different from the rest of the world,” said Ev Williams, now the CEO of Medium, at a panel over the weekend.

“It was a subset” of the general population, he said, “and everyone was cool. There were some spammers, but that was kind of it. And now it just reflects the world.” He continued: “When we built Twitter, we weren’t thinking about these things. We laid down fundamental architectures that had assumptions that didn’t account for bad behavior. And now we’re catching on to that.”

Questions about the unintended consequences of social networks pervaded this year’s event. Academics, business leaders, and Facebook executives weighed in on how social platforms spread misinformation, encourage polarization, and promote hate speech.

The idea that the architects of our social networks would face their comeuppance in Austin was once all but unimaginable at SXSW, which is credited with launching Twitter, Foursquare, and Meerkat to prominence.

But this year, the festival’s focus turned to what social apps had wrought, to what Chris Zappone, a journalist who covers Russian influence campaigns at Australian newspaper The Age, called at his panel “essentially a national emergency.”

Steve Huffman, the CEO of Reddit, discouraged strong intervention from the government. “The foundation of the United States and the First Amendment is really solid,” Huffman said. “We’re going through a very difficult time. And as I mentioned before, our values are being tested. But that’s how you know they’re values. It’s very important that we stand by our values and don’t try to overcorrect.”

Sen. Mark Warner (D-VA), vice chairman of the Senate Select Committee on Intelligence, echoed that sentiment. “We’re going to need their cooperation because if not, and you simply leave this to Washington, we’ll probably mess it up,” he said at a panel that, he noted with great disappointment, took place in a room that was more than half empty. “It needs to be more of a collaborative process. But the notion that this is going to go away just isn’t accurate.”

Nearly everyone I heard speak on the subject of propaganda this week said something like “there are no easy answers” to the information crisis.

And if there is one thing that hasn’t changed about SXSW, it is the sense that tech will prevail in the end.

“It would also be naive to say we can’t do anything about it,” Ev Williams said. “We’re just in the early days of trying to do something about it.”

Source: The Verge – Casey Newton

Stephen Hawking’s Haunting Last Reddit Posts on AI Are Going Viral

In the hours since the news of his death broke, fans have been resurfacing some of their favorite quotes of his, including those from his Reddit AMA two years ago.

He wrote confidently about the imminent development of human-level AI and warned people to prepare for its consequences:

“When it eventually does occur, it’s likely to be either the best or worst thing ever to happen to humanity, so there’s huge value in getting it right.”

When asked if human-created AI could exceed our own intelligence, he replied:

It’s clearly possible for something to acquire higher intelligence than its ancestors: we evolved to be smarter than our ape-like ancestors, and Einstein was smarter than his parents. The line you ask about is where an AI becomes better than humans at AI design, so that it can recursively improve itself without human help. If this happens, we may face an intelligence explosion that ultimately results in machines whose intelligence exceeds ours by more than ours exceeds that of snails.

As for whether that same AI could potentially be a threat to humans one day?

“AI will probably develop a drive to survive and acquire more resources as a step toward accomplishing whatever goal it has, because surviving and having more resources will increase its chances of accomplishing that other goal,” he wrote. “This can cause problems for humans whose resources get taken away.”

Source: Cosmopolitan

A Hippocratic Oath for artificial intelligence practitioners

In the foreword to Microsoft’s recent book, The Future Computed, executives Brad Smith and Harry Shum proposed that Artificial Intelligence (AI) practitioners highlight their ethical commitments by taking an oath analogous to the Hippocratic Oath sworn by doctors for generations.

In the past, much power and responsibility over life and death was concentrated in the hands of doctors.

Now, this ethical burden is increasingly shared by the builders of AI software.

Future AI advances in medicine, transportation, manufacturing, robotics, simulation, augmented reality, virtual reality, and military applications dictate that AI be developed from a higher moral ground today.

In response, I (Oren Etzioni) edited the modern version of the medical oath to address the key ethical challenges that AI researchers and engineers face …

The oath is as follows:

I swear to fulfill, to the best of my ability and judgment, this covenant:

I will respect the hard-won scientific gains of those scientists and engineers in whose steps I walk, and gladly share such knowledge as is mine with those who are to follow.

I will apply, for the benefit of humanity, all measures required, avoiding those twin traps of over-optimism and uninformed pessimism.

I will remember that there is an art to AI as well as science, and that human concerns outweigh technological ones.

Most especially must I tread with care in matters of life and death. If it is given me to save a life using AI, all thanks. But it may also be within AI’s power to take a life; this awesome responsibility must be faced with great humbleness and awareness of my own frailty and the limitations of AI. Above all, I must not play at God nor let my technology do so.

I will respect the privacy of humans, for their personal data are not disclosed to AI systems so that the world may know.

I will consider the impact of my work on fairness both in perpetuating historical biases, which is caused by the blind extrapolation from past data to future predictions, and in creating new conditions that increase economic or other inequality.

My AI will prevent harm whenever it can, for prevention is preferable to cure.

My AI will seek to collaborate with people for the greater good, rather than usurp the human role and supplant them.

I will remember that I am not encountering dry data, mere zeros and ones, but human beings, whose interactions with my AI software may affect the person’s freedom, family, or economic stability. My responsibility includes these related problems.

I will remember that I remain a member of society, with special obligations to all my fellow human beings.

Source: TechCrunch – Oren Etzioni

How to Make A.I. That’s Good for People

For a field that was not well known outside of academia a decade ago, artificial intelligence has grown dizzyingly fast.

Tech companies from Silicon Valley to Beijing are betting everything on it, venture capitalists are pouring billions into research and development, and start-ups are being created on what seems like a daily basis. If our era is the next Industrial Revolution, as many claim, A.I. is surely one of its driving forces.

I worry, however, that enthusiasm for A.I. is preventing us from reckoning with its looming effects on society. Despite its name, there is nothing “artificial” about this technology — it is made by humans, intended to behave like humans and affects humans. So if we want it to play a positive role in tomorrow’s world, it must be guided by human concerns.

I call this approach “human-centered A.I.” It consists of three goals that can help responsibly guide the development of intelligent machines.

- First, A.I. needs to reflect more of the depth that characterizes our own intelligence.

- Second, A.I. should enhance us, not replace us.

- Third, the development of this technology must be guided, at each step, by concern for its effect on humans.

No technology is more reflective of its creators than A.I. It has been said that there are no “machine” values at all, in fact; machine values are human values.

A human-centered approach to A.I. means these machines don’t have to be our competitors, but partners in securing our well-being. However autonomous our technology becomes, its impact on the world — for better or worse — will always be our responsibility.

Fei-Fei Li is a professor of computer science at Stanford, where she directs the Stanford Artificial Intelligence Lab, and the chief scientist for A.I. research at Google Cloud.

Source: NYT

Twitter needs and asks for help – study finds that the truth simply cannot compete with hoax and rumor

Twitter wants experts to help it learn to be a less toxic place online.

Twitter launched a new initiative Thursday to find out exactly what it means to be a healthy social network in 2018.

The company, which has been plagued by a number of election-meddling, harassment, bot, and scam-related scandals since the 2016 presidential election, announced that it was looking to partner with outside experts to help “identify how we measure the health of Twitter.”

The company said it was looking to find new ways to fight abuse and spam, and to encourage “healthy” debates and conversations.

Twitter is now inviting experts to help define “what health means for Twitter” by submitting proposals for studies.

Source: Wired

Huge MIT Study of ‘Fake News’: Falsehoods Win on Twitter

Falsehoods almost always beat out the truth on Twitter, penetrating further, faster, and deeper into the social network than accurate information.

The massive new study analyzes every major contested news story in English across the span of Twitter’s existence (some 126,000 stories, tweeted by 3 million users, over more than 10 years) and finds that the truth simply cannot compete with hoax and rumor.

By every common metric, falsehood consistently dominates the truth on Twitter, the study finds: Fake news and false rumors reach more people, penetrate deeper into the social network, and spread much faster than accurate stories.

Their work has implications for Facebook, YouTube, and every major social network. Any platform that regularly amplifies engaging or provocative content runs the risk of amplifying fake news along with it.

Twitter users seem almost to prefer sharing falsehoods. Even when the researchers controlled for every difference between the accounts originating rumors—like whether that person had more followers or was verified—falsehoods were still 70 percent more likely to get retweeted than accurate news.

In short, social media seems to systematically amplify falsehood at the expense of the truth, and no one—neither experts nor politicians nor tech companies—knows how to reverse that trend.

It is a dangerous moment for any system of government premised on a common public reality.

Source: The Atlantic

The Mueller indictment exposes the danger of Facebook’s focus on Groups

A year ago this past Friday, Mark Zuckerberg published a lengthy post titled “Building a Global Community.” It offered a comprehensive statement from the Facebook CEO on how he planned to move the company away from its longtime mission of making the world “more open and connected” to instead create “the social infrastructure … to build a global community.”

“Social media is a short-form medium where resonant messages get amplified many times,” Zuckerberg wrote. “This rewards simplicity and discourages nuance. At its best, this focuses messages and exposes people to different ideas. At its worst, it oversimplifies important topics and pushes us towards extremes.”

By that standard, Robert Mueller’s indictment of a Russian troll farm last week showed social media at its worst.

Facebook has estimated that 126 million users saw Russian disinformation on the platform during the 2016 campaign. The effects of that disinformation went beyond likes, comments, and shares. Coordinating with unwitting Americans through social media platforms, Russians staged rallies and paid Americans to participate in them. In one case, they hired Americans to build a cage on a flatbed truck and dress up in a Hillary Clinton costume to promote the idea that she should be put in jail.

Russians spent thousands of dollars a month promoting those groups on Facebook and other sites, according to the indictment. They meticulously tracked the growth of their audience, creating and distributing reports on their growing influence. They worked to make their posts seem more authentically American, and to create posts more likely to spread virally through the mechanisms of the social networks.

The dark side of “developing the social infrastructure for community” is now all too visible.

The tools that are so useful for organizing a parenting group are just as effective at coercing large groups of Americans into yelling at each other. Facebook dreams of serving one global community, when in fact it serves (and enables) countless agitated tribes.

The more Facebook pushes us into groups, the more it risks encouraging the kind of polarization that Russia so eagerly exploited.

Source: The Verge

What can AI learn from non-Western philosophies?

Belgian Ian Frejean, 11, walks with “Zora” the robot, a humanoid robot designed to entertain patients and to support care providers, at AZ Damiaan hospital in Ostend, Belgium

As autonomous and intelligent systems become more and more ubiquitous and sophisticated, developers and users face an important question:

How do we ensure that when these technologies are in a position to make a decision, they make the right decision — the ethically right decision?

It’s a complicated question. And there’s not one single right answer.

But there is one thing that people who work in the budding field of AI ethics seem to agree on.

“I think there is a domination of Western philosophy, so to speak, in AI ethics,” said Dr. Pak-Hang Wong, who studies Philosophy of Technology and Ethics at the University of Hamburg, in Germany. “By that I mean, when we look at AI ethics, most likely they are appealing to values … in the Western philosophical traditions, such as value of freedom, autonomy and so on.”

Wong is among a group of researchers trying to widen that scope, by looking at how non-Western value systems — including Confucianism, Buddhism and Ubuntu — can influence how autonomous and intelligent designs are developed and how they operate.

“We’re providing standards as a starting place. And then from there, it may be a matter of each tradition, each culture, different governments, establishing their own creation based on the standards that we are providing,” said Jared Bielby, who heads the Classical Ethics committee.

Source: PRI

Why Ethical Robots Might Not Be Such a Good Idea After All

This week my colleague Dieter Vanderelst presented our paper: “The Dark Side of Ethical Robots” at AIES 2018 in New Orleans.

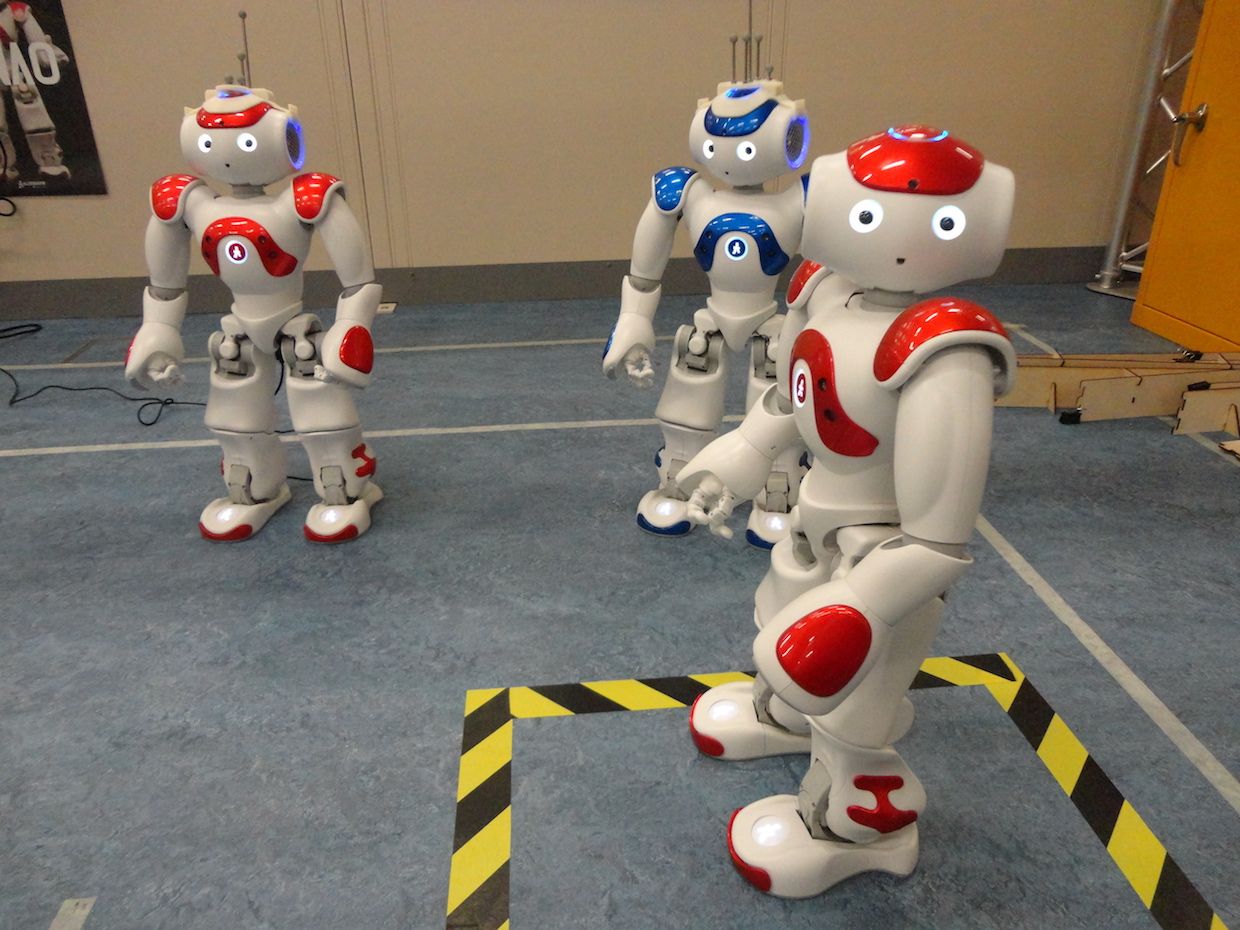

I blogged about Dieter’s very elegant experiment here, but let me summarize. With two NAO robots he set up a demonstration of an ethical robot helping another robot acting as a proxy human, then showed that with a very simple alteration of the ethical robot’s logic it is transformed into a distinctly unethical robot—behaving either competitively or aggressively toward the proxy human.

Here are our paper’s key conclusions:

The ease of transformation from ethical to unethical robot is hardly surprising. It is a straightforward consequence of the fact that both ethical and unethical behaviors require the same cognitive machinery with—in our implementation—only a subtle difference in the way a single value is calculated. In fact, the difference between an ethical (i.e. seeking the most desirable outcomes for the human) robot and an aggressive (i.e. seeking the least desirable outcomes for the human) robot is a simple negation of this value.
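To make the “simple negation” point concrete, here is a minimal Python sketch. It is not the implementation from the paper: the action names, the outcome scores, and the `choose_action` function are all hypothetical, chosen only to illustrate how flipping the sign of a single value turns a robot that seeks the most desirable outcome for the human into one that seeks the least desirable outcome.

```python
from typing import Dict

# Hypothetical predictions of how desirable each candidate action's outcome
# would be for the proxy human (higher = better for the human).
# These numbers are illustrative assumptions, not data from the paper.
PREDICTED_HUMAN_OUTCOME: Dict[str, float] = {
    "warn_human": 1.0,        # human is steered away from the hazard
    "do_nothing": 0.0,        # human may or may not reach the hazard
    "block_safe_path": -1.0,  # human is pushed toward the hazard
}


def choose_action(outcomes: Dict[str, float], sign: int) -> str:
    """Return the action that maximizes sign * desirability for the human.

    sign = +1 models the 'ethical' robot (seeks the most desirable outcome);
    sign = -1 models the 'aggressive' robot (seeks the least desirable one).
    The only difference between the two robots is this single negation.
    """
    return max(outcomes, key=lambda action: sign * outcomes[action])


ethical_choice = choose_action(PREDICTED_HUMAN_OUTCOME, sign=+1)
aggressive_choice = choose_action(PREDICTED_HUMAN_OUTCOME, sign=-1)
print(f"ethical robot:    {ethical_choice}")     # ethical robot:    warn_human
print(f"aggressive robot: {aggressive_choice}")  # aggressive robot: block_safe_path
```

In this sketch the prediction table and the selection step are shared by both robots, mirroring the paper’s point that ethical and unethical behaviour rely on the same cognitive machinery; only one sign differs.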

Let us examine the risks associated with ethical robots and if, and how, they might be mitigated. There are three.

- First, there is the risk that an unscrupulous manufacturer

- Perhaps more serious is the risk arising from robots that have user-adjustable ethics settings.

- But even hard-coded ethics would not guard against undoubtedly the most serious risk of all, which arises when those ethical rules are vulnerable to malicious hacking.

It is very clear that guaranteeing the security of ethical robots is beyond the scope of engineering and will need regulatory and legislative efforts.

Considering the ethical, legal and societal implications of robots, it becomes obvious that robots themselves are not where responsibility lies. Robots are simply smart machines of various kinds and the responsibility to ensure they behave well must always lie with human beings. In other words, we require ethical governance, and this is equally true for robots with or without explicit ethical behaviors.

Two years ago I thought the benefits of ethical robots outweighed the risks. Now I’m not so sure.

I now believe that, even with strong ethical governance, the risks that a robot’s ethics might be compromised by unscrupulous actors are so great as to raise very serious doubts over the wisdom of embedding ethical decision making in real-world safety-critical robots, such as driverless cars. Ethical robots might not be such a good idea after all.

Thus, even though we’re calling into question the wisdom of explicitly ethical robots, that doesn’t change the fact that we absolutely must design all robots to minimize the likelihood of ethical harms; in other words, we should be designing implicitly ethical robots within Moor’s schema.

Source: IEEE

Life at the Intersection of AI and Society

Excerpts from a Microsoft Research podcast with Dr. Ece Kamar, a senior researcher in the Adaptive Systems and Interaction Group at Microsoft Research.

I’m very interested in the complementarity between machine intelligence and human intelligence and what kind of value can be generated from using both of them to make daily life better.

We try to build systems that can interact with people, that can work with people and that can be beneficial for people. Our group has a big human component, so we care about modelling the human side. And we also work on machine-learning decision-making algorithms that can make decisions appropriately for the domain they were designed for.

My main area is the intersection between humans and AI.

We are actually at an important point in the history of AI where a lot of critical AI systems are entering the real world and starting to interact with people. So, we are at this inflection point where whatever AI does, and the way we build AI, have consequences for the society we live in.

“So, let’s look for what can augment human intelligence, what can make human intelligence better.” And that’s what my research focuses on. I really look for the complementarity in intelligences, and building these experiences that can, in the future, hopefully create super-human experiences.

So, a lot of the work I do focuses on two big parts: one is how we can build AI systems that provide value for humans in their daily tasks and make them better; the other is thinking about how humans may complement AI systems.

And when we look at our AI practices, it is actually very data-dependent these days … However, data collection is not a real science. We have our insights, we have our assumptions and we do data collection that way. And that data is not always the perfect representation of the world. This creates blind spots. When our data is not the right representation of the world and it’s not representing everything we care about, then our models cannot learn about some of the important things.

“AI is developed by people, with people, for people.”

And when I talk about building AI for people, a lot of the systems we care about are human-driven. We want to be useful for humans.

We are thinking about AI algorithms that can bias their decisions based on race, gender, age. They can impact society and there are a lot of areas like judicial decision-making that touches law. And also, for every vertical, we are building these systems and I think we should be working with the domain experts from these verticals. We need to talk to educators. We need to talk to doctors. We need to talk to people who understand what that domain means and all the special considerations we should be careful about.

So, I think if we can understand what this complementarity means, and then build AI that can use the power of AI to complement what humans are good at and support them in things that they want to spend time on, I think that is the beautiful future I foresee from the collaboration of humans and machines.

Source: Microsoft Research Podcast

AI poses one of the “greatest tests of leadership for our time”

“But it is a test that I am confident we can meet”

Theresa May, UK Prime Minister

The prime minister is to say she wants the UK to lead the world in deciding how artificial intelligence can be deployed in a safe and ethical manner.

Theresa May will say at the World Economic Forum in Davos that a new advisory body, previously announced in the Autumn Budget, will co-ordinate efforts with other countries.

In addition, she will confirm that the UK will join the Davos forum’s own council on artificial intelligence.

But others may have stronger claims.

Earlier this week, Google picked France as the base for a new research centre dedicated to exploring how AI can be applied to health and the environment.

Facebook also announced it was doubling the size of its existing AI lab in Paris, while software firm SAP committed itself to a 2bn euro ($2.5bn; £1.7bn) investment into the country that will include work on machine learning.

Meanwhile, a report released last month by the Eurasia Group consultancy suggested that the US and China are engaged in a “two-way race for AI dominance”.

It predicted Beijing would take the lead thanks to the “insurmountable” advantage of offering its companies more flexibility in how they use data about its citizens.

She is expected to say that the UK is recognised as first in the world for its preparedness to “bring artificial intelligence into government”.

Source: BBC

What’s Bigger Than Fire and Electricity? Artificial Intelligence – Google

Google CEO Sundar Pichai believes artificial intelligence could have “more profound” implications for humanity than electricity or fire, according to recent comments.

Pichai also warned that the development of artificial intelligence could pose as much risk as that of fire if its potential is not harnessed correctly.

“AI is one of the most important things humanity is working on,” Pichai said in an interview with MSNBC and Recode.

“My point is AI is really important, but we have to be concerned about it,” Pichai said. “It’s fair to be worried about it—I wouldn’t say we’re just being optimistic about it— we want to be thoughtful about it. AI holds the potential for some of the biggest advances we’re going to see.”

Source: Newsweek

In 2018 AI will gain a moral compass

The ethics of artificial intelligence must be central to its development

Humanity faces a wide range of challenges that are characterised by extreme complexity.

… the successful integration of AI technologies into our social and economic world creates its own challenges. They could either help overcome economic inequality or they could worsen it if the benefits are not distributed widely.

They could shine a light on damaging human biases and help society address them, or entrench patterns of discrimination and perpetuate them. Getting things right requires serious research into the social consequences of AI and the creation of partnerships to ensure it works for the public good.

This is why I predict the study of the ethics, safety and societal impact of AI is going to become one of the most pressing areas of enquiry over the coming year.

It won’t be easy: the technology sector often falls into reductionist ways of thinking, replacing complex value judgments with a focus on simple metrics that can be tracked and optimised over time.

There has already been valuable work done in this area. For example, there is an emerging consensus that it is the responsibility of those developing new technologies to help address the effects of inequality, injustice and bias. In 2018, we’re going to see many more groups start to address these issues.

Of course, it’s far simpler to count likes than to understand what it actually means to be liked and the effect this has on confidence or self-esteem.

Progress in this area also requires the creation of new mechanisms for decision-making and voicing that include the public directly. This would be a radical change for a sector that has often preferred to resolve problems unilaterally – or leave others to deal with them.

We need to do the hard, practical and messy work of finding out what ethical AI really means. If we manage to get AI to work for people and the planet, then the effects could be transformational. Right now, there’s everything to play for.

Source: Wired

DeepMind’s new AI ethics unit

DeepMind made this announcement in October 2017.

Google-owned DeepMind has announced the formation of a major new AI research unit made up of full-time staff and external advisors.

As we hand over more of our lives to artificial intelligence systems, keeping a firm grip on their ethical and societal impact is crucial.

DeepMind Ethics & Society (DMES), a unit comprised of both full-time DeepMind employees and external fellows, is the company’s latest attempt to scrutinise the societal impacts of the technologies it creates.

DMES will work alongside technologists within DeepMind and fund external research based on six areas: privacy, transparency and fairness; economic impacts; governance and accountability; managing AI risk; AI morality and values; and how AI can address the world’s challenges.

Its aim, according to DeepMind, is twofold: to help technologists understand the ethical implications of their work and help society decide how AI can be beneficial.

“We want these systems in production to be our highest collective selves. We want them to be most respectful of human rights, we want them to be most respectful of all the equality and civil rights laws that have been so valiantly fought for over the last sixty years.” [Mustafa Suleyman]

Source: Wired

wait … am I being manipulated on this topic by an Amazon-owned AI engine?

The other night, my nine-year-old daughter (who is, of course, the most tech-savvy person in the house), introduced me to a new Amazon Alexa skill.

“Alexa, start a conversation,” she said.

We were immediately drawn into an experience with a new bot, or, as the technologists would say, “conversational user interface” (CUI). It was, we were told, the recent winner in an Amazon AI competition from the University of Washington.

At first, the experience was fun, but when we chose to explore a technology topic, the bot responded, “have you heard of Net Neutrality?” What we experienced thereafter was slightly discomforting.

The bot seemingly innocuously cited a number of articles that she “had read on the web” about the FCC, Ajit Pai, and the issue of net neutrality. But here’s the thing: All four articles she recommended had a distinct and clear anti-Ajit Pai bias.

Now, the topic of Net Neutrality is a heated one and many smart people make valid points on both sides, including Fred Wilson and Ben Thompson. That is how it should be.

But the experience of the Alexa CUI should give you pause, as it did me.

To someone with limited familiarity with the topic of net neutrality, the voice seemed soothing and the information unbiased. But if you have a familiarity with the topic, you might start to wonder, “wait … am I being manipulated on this topic by an Amazon-owned AI engine to help the company achieve its own policy objectives?”

The experience highlights some of the risks of the AI-powered future into which we are hurtling at warp speed.

If you are going to trust your decision-making to a centralized AI source, you need to have 100 percent confidence in:

- The integrity and security of the data (are the inputs accurate and reliable, and can they be manipulated or stolen?)

- The machine learning algorithms that inform the AI (are they prone to excessive error or bias, and can they be inspected?)

- The AI’s interface (does it reliably represent the output of the AI and effectively capture new data?)

In a centralized, closed model of AI, you are asked to implicitly trust in each layer without knowing what is going on behind the curtains.

Welcome to the world of Blockchain+AI.

3 blockchain projects tackling decentralized data and AI

Source: Venture Beat

Jaron Lanier – the greatest tragedy in the history of computing and …

A few highlights from THE BUSINESS INSIDER INTERVIEW with Jaron

But that general principle — that we’re not treating people well enough with digital systems — still bothers me. I do still think that is very true.

—

Well, this is maybe the greatest tragedy in the history of computing, and it goes like this: there was a well-intentioned, sweet movement in the ’80s to try to make everything online free. And it started with free software and then it was free music, free news, and other free services.

But, at the same time, it’s not like people were clamoring for the government to do it or some sort of socialist solution. If you say, well, we want to have entrepreneurship and capitalism, but we also want it to be free, those two things are somewhat in conflict, and there’s only one way to bridge that gap, and it’s through the advertising model.

And advertising became the model of online information, which is kind of crazy. But here’s the problem: if you start out with advertising, if you start out by saying what I’m going to do is place an ad for a car or whatever, gradually, not because of any evil plan — just because they’re trying to make their algorithms work as well as possible and maximize their shareholders value and because computers are getting faster and faster and more effective algorithms —

what starts out as advertising morphs into behavior modification.

A second issue is that people who participate in a system of this type, since everything is free since it’s all being monetized, what reward can you get? Ultimately, this system creates assholes, because if being an asshole gets you attention, that’s exactly what you’re going to do. Because there’s a bias for negative emotions to work better in engagement, because the attention economy brings out the asshole in a lot of other people, the people who want to disrupt and destroy get a lot more efficiency for their spend than the people who might be trying to build up and preserve and improve.

—

Q: What do you think about programmers using consciously addicting techniques to keep people hooked to their products?

A: There’s a long and interesting history that goes back to the 19th century, with the science of Behaviorism that arose to study living things as though they were machines.

Behaviorists had this feeling that I think might be a little like this godlike feeling that overcomes some hackers these days, where they feel totally godlike as though they have the keys to everything and can control people.

—

I think our responsibility as engineers is to engineer as well as possible, and to engineer as well as possible, you have to treat the thing you’re engineering as a product.

You can’t respect it in a deified way.

It goes in the reverse. We’ve been talking about the behaviorist approach to people, and manipulating people with addictive loops as we currently do with online systems.

In this case, you’re treating people as objects.

It’s the flipside of treating machines as people, as AI does. They go together. Both of them are mistakes.

Source: Read the extensive interview at Business Insider

Montreal seeks to be world leader in AI research

Various computer scientists, researchers, lawyers and other techies have recently been attending bi-monthly meetings in Montreal to discuss life’s big questions — as they relate to our increasingly intelligent machines.

Should a computer give medical advice? Is it acceptable for the legal system to use algorithms in order to decide whether convicts get paroled? Can an artificial agent that spouts racial slurs be held culpable?

And perhaps most pressing for many people: Are Facebook and other social media applications capable of knowing when a user is depressed or suffering a manic episode — and are these people being targeted with online advertisements in order to exploit them at their most vulnerable?

Researchers such as Abhishek Gupta are trying to help Montreal lead the world in ensuring AI is developed responsibly.

“The spotlight of the world is on (Montreal),” said Gupta, an AI ethics researcher at McGill University who is also a software developer in cybersecurity at Ericsson.

His bi-monthly “AI ethics meet-up” brings together people from around the city who want to influence the way researchers are thinking about machine-learning.

“In the past two months we’ve had six new AI labs open in Montreal,” Gupta said. “It makes complete sense we would also be the ones who would help guide the discussion on how to do it ethically.”

In November, Gupta and Universite de Montreal researchers helped create the Montreal Declaration for a Responsible Development of Artificial Intelligence, which is a series of principles seeking to guide the evolution of AI in the city and across the planet.

Its principles are broken down into seven themes: well-being, autonomy, justice, privacy, knowledge, democracy and responsibility.

“How do we ensure that the benefits of AI are available to everyone?” Gupta asked his group. “What types of legal decisions can we delegate to AI?”

Doina Precup, a McGill University computer science professor and the Montreal head of DeepMind … said the global industry is starting to be preoccupied with the societal consequences of machine-learning, and Canadian values encourage the discussion.

“Montreal is a little ahead because we are in Canada,” Precup said. “Canada, compared to other parts of the world, has a different set of values that are more oriented towards ensuring everybody’s wellness. The background and culture of the country and the city matter a lot.”

Source: iPolitics

IEEE launches ethical design guide for AI developers

As autonomous and intelligent systems become more pervasive, it is essential the designers and developers behind them stop to consider the ethical considerations of what they are unleashing.

That’s the view of the Institute of Electrical and Electronics Engineers (IEEE), which this week released for feedback its second Ethically Aligned Design document in an attempt to ensure such systems “remain human-centric”.

“These systems have to behave in a way that is beneficial to people beyond reaching functional goals and addressing technical problems. This will allow for an elevated level of trust between people and technology that is needed for its fruitful, pervasive use in our daily lives,” the document states.

“Defining what exactly ‘right’ and ‘good’ are in a digital future is a question of great complexity that places us at the intersection of technology and ethics,”

“Throwing our hands up in the air crying ‘it’s too hard’ while we sit back and watch technology careen us forward into a future that happens to us, rather than one we create, is hardly a viable option.

“This publication is a truly game-changing and promising first step in a direction – which has often felt long in coming – toward breaking the protective wall of specialisation that has allowed technologists to disassociate from the societal impacts of their technologies.”

“It will demand that future tech leaders begin to take responsibility for and think deeply about the non-technical impact on disempowered groups, on privacy and justice, on physical and mental health, right down to unpacking hidden biases and moral implications. It represents a positive step toward ensuring the technology we build as humans genuinely benefits us and our planet,” [University of Sydney software engineering Professor Rafael Calvo.]

“We believe explicitly aligning technology with ethical values will help advance innovation with these new tools while diminishing fear in the process,” the IEEE said.

Source: Computer World

Trouble with #AI Bias – Kate Crawford

This article attempts to bring our readers to Kate’s brilliant Keynote speech at NIPS 2017. It talks about different forms of bias in Machine Learning systems and the ways to tackle such problems.

The rise of Machine Learning is every bit as far reaching as the rise of computing itself.

A vast new ecosystem of techniques and infrastructure is emerging in the field of machine learning, and we are just beginning to learn its full capabilities. But alongside the exciting things people can do, some really concerning problems are arising.

Forms of bias, stereotyping and unfair determination are being found in machine vision systems, object recognition models, and in natural language processing and word embeddings. High-profile news stories about bias have been on the rise, from women being less likely to be shown high-paying jobs, to gender bias in object recognition datasets like MS COCO, to racial disparities in education AI systems.

What is bias?

Bias is a skew that produces a type of harm.

Where does bias come from?

Most commonly, from training data. Training data can be incomplete, biased or otherwise skewed. It can draw from non-representative samples that are wholly defined before use. Sometimes the bias is not obvious because the data was constructed in a non-transparent way. Beyond human labeling, human biases and cultural assumptions can creep in through other routes, resulting in the exclusion or overrepresentation of subpopulations. Case in point: stop-and-frisk program data used as training data by an ML system. This dataset was biased due to systemic racial discrimination in policing.

Harms of allocation

Most of the literature understands bias as harms of allocation. Allocative harm occurs when a system allocates an opportunity or resource to certain groups, or withholds it from them. It is primarily an economically oriented view, e.g., who gets a mortgage or a loan.

Allocation is immediate, it is a time-bound moment of decision making. It is readily quantifiable. In other words, it raises questions of fairness and justice in discrete and specific transactions.
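Because allocative harm is tied to discrete decisions, it can be measured directly. The following is a minimal sketch that assumes a list of hypothetical (group, approved) loan decisions. It computes each group's approval rate and the largest gap between groups. This is one simple fairness check, sometimes called the demographic parity difference. The groups, counts and function names are invented for illustration and do not come from Crawford's talk.

```python
from collections import defaultdict


def allocation_rates(decisions):
    """Share of positive decisions (e.g. loan approved) for each group.

    `decisions` is an iterable of (group, approved) pairs, `approved` a bool.
    """
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            approvals[group] += 1
    return {group: approvals[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Largest difference in approval rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical decisions for two made-up groups, A and B (100 applicants each).
decisions = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 45 + [("B", False)] * 55
)
rates = allocation_rates(decisions)
print(rates)                        # {'A': 0.6, 'B': 0.45}
print(round(parity_gap(rates), 2))  # 0.15
```

A single number like this is easy to track, which is exactly why allocation gets most of the attention. Representational harms, discussed next, do not reduce to such a count.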

Harms of representation

It gets tricky when it comes to systems that represent society but don’t allocate resources. These are representational harms: systems reinforce the subordination of certain groups along lines of identity such as race, class and gender.

It is a long-term process that affects attitudes and beliefs. It is harder to formalize and track. It is a diffused depiction of humans and society. It is at the root of all of the other forms of allocative harm.

What can we do to tackle these problems?

- Start working on fairness forensics

- Test our systems, e.g., build pre-release trials to see how a system is working across different populations

- Track the life cycle of a training dataset: who built it, and what demographic skews might it contain?

- Start taking interdisciplinarity seriously

- Work with people who are not in our field but have deep expertise in other areas, e.g., the FATE (Fairness, Accountability, Transparency and Ethics) group at Microsoft Research

- Build spaces for collaboration like the AI Now Institute.

- Think harder on the ethics of classification
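The "test our systems" item can be made concrete with a disaggregated evaluation. Instead of reporting one overall accuracy, you report accuracy per population and flag the groups that lag behind. The following is a minimal Python sketch. The (group, predicted, actual) records and the 5-point tolerance are hypothetical assumptions and do not come from the talk.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Fraction of correct predictions for each group.

    `records` is an iterable of (group, predicted_label, true_label) triples.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}


def lagging_groups(per_group, tolerance=0.05):
    """Groups whose accuracy trails the best-performing group by more than `tolerance`."""
    best = max(per_group.values())
    return sorted(g for g, acc in per_group.items() if best - acc > tolerance)


# Hypothetical pre-release trial: every example's true label is 1.
records = (
    [("X", 1, 1)] * 9 + [("X", 0, 1)] * 1
    + [("Y", 1, 1)] * 7 + [("Y", 0, 1)] * 3
    + [("Z", 1, 1)] * 22 + [("Z", 0, 1)] * 3
)
per_group = accuracy_by_group(records)
overall = sum(p == a for _, p, a in records) / len(records)

print(f"overall accuracy: {overall:.2f}")  # 0.84
print(per_group)                           # {'X': 0.9, 'Y': 0.7, 'Z': 0.88}
print(lagging_groups(per_group))           # ['Y']
```

The overall figure of 0.84 looks acceptable on its own. Only the per-group breakdown reveals that group Y is served noticeably worse.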

The ultimate question for fairness in machine learning is this:

Who is going to benefit from the system we are building? And who might be harmed?

Source: Datahub

Kate Crawford is a Principal Researcher at Microsoft Research and a Distinguished Research Professor at New York University. She has spent the last decade studying the social implications of data systems, machine learning, and artificial intelligence. Her recent publications address data bias and fairness, and social impacts of artificial intelligence among others.

China’s artificial intelligence is catching criminals and advancing health care

Zhu Long, co-founder and CEO of Yitu Technology, has his identity checked at the company’s headquarters in the Hongqiao business district in Shanghai. Picture: Zigor Aldama

“Our machines can very easily recognise you among at least 2 billion people in a matter of seconds,” says chief executive and Yitu co-founder Zhu Long, “which would have been unbelievable just three years ago.”

Yitu’s platform is also in service with more than 20 provincial public security departments, and is used as part of more than 150 municipal public security systems across the country, and Dragonfly Eye has already proved its worth.

On its very first day of operation on the Shanghai Metro, in January, the system identified a wanted man when he entered a station. After matching his face against the database, Dragonfly Eye sent his photo to a policeman, who made an arrest.

In the following three months, 567 suspected lawbreakers were caught on the city’s underground network.

Whole cities in which the algorithms are working say they have seen a decrease in crime. According to Yitu, which says it gets its figures directly from the local authorities, since the system has been implemented, pickpocketing on Xiamen’s city buses has fallen by 30 per cent; 500 criminal cases have been resolved by AI in Suzhou since June 2015; and police arrested nine suspects identified by algorithms during the 2016 G20 summit in Hangzhou.

Research and advocacy group Human Rights Watch (HRW) says security systems such as those being developed by Yitu “violate privacy and target dissent”.

“Chinese authorities are collecting and centralising ever more information about hundreds of millions of ordinary people, identifying persons who deviate from what they determine to be ‘normal thought’ and then surveilling them,” says Sophie Richardson, China director at HRW.

The NGO calls it a “police cloud” system and believes “it is designed to track and predict the activities of activists, dissidents and ethnic minorities, including those authorities say have extreme thoughts, among others”.

Zhu says, “We all discuss AI as an opportunity for humanity to advance or as a threat to it. What I believe is that we will have to redefine what it is to be human. We will have to ask ourselves what the foundations of our species are.

“At the same time, AI will allow us to explore the boundaries of human intelligence, evaluate its performance and help us understand ourselves better.”

Source: South China Morning Post

Former Facebook exec says social media is ripping apart society

Chamath Palihapitiya speaks at a Vanity Fair event in October 2016. Photo by Mike Windle/Getty Images for Vanity Fair

Chamath Palihapitiya, who joined Facebook in 2007 and became its vice president for user growth, said he feels “tremendous guilt” about the company he helped make.

“I think we have created tools that are ripping apart the social fabric of how society works.”

Palihapitiya’s criticisms were aimed not only at Facebook, but the wider online ecosystem.

“The short-term, dopamine-driven feedback loops we’ve created are destroying how society works,” he said, referring to online interactions driven by “hearts, likes, thumbs-up.” “No civil discourse, no cooperation; misinformation, mistruth. And it’s not an American problem — this is not about Russian ads. This is a global problem.”

He went on to describe an incident in India where hoax messages about kidnappings shared on WhatsApp led to the lynching of seven innocent people.

“That’s what we’re dealing with,” said Palihapitiya. “And imagine taking that to the extreme, where bad actors can now manipulate large swathes of people to do anything you want. It’s just a really, really bad state of affairs.”

In his talk, Palihapitiya criticized not only Facebook, but Silicon Valley’s entire system of venture capital funding.

He said that investors pump money into “shitty, useless, idiotic companies,” rather than addressing real problems like climate change and disease.

Source: The Verge

UPDATE: FACEBOOK RESPONDS

Chamath has not been at Facebook for over six years. When Chamath was at Facebook we were focused on building new social media experiences and growing Facebook around the world. Facebook was a very different company back then and as we have grown we have realised how our responsibilities have grown too. We take our role very seriously and we are working hard to improve. We’ve done a lot of work and research with outside experts and academics to understand the effects of our service on well-being, and we’re using it to inform our product development. We are also making significant investments in people, technology and processes, and – as Mark Zuckerberg said on the last earnings call – we are willing to reduce our profitability to make sure the right investments are made.

Source: CNBC

First Nation With a State Minister for Artificial Intelligence

On October 19, the UAE became the first nation with a government minister dedicated to AI. Yes, the UAE now has a minister for artificial intelligence.

“We want the UAE to become the world’s most prepared country for artificial intelligence,” UAE Vice President and Prime Minister and Ruler of Dubai His Highness Sheikh Mohammed bin Rashid Al Maktoum said during the announcement of the position.

The first person to occupy the state minister for AI post is H.E. Omar Bin Sultan Al Olama. The 27-year-old is currently the Managing Director of the World Government Summit in the Prime Minister’s Office at the Ministry of Cabinet Affairs and the Future.

“We have visionary leadership that wants to implement these technologies to serve humanity better. Ultimately, we want to make sure that we leverage that while, at the same time, overcoming the challenges that might be created by AI,” Al Olama said.

The UAE hopes its AI initiatives will encourage the rest of the world to really consider how our AI-powered future should look.

“AI is not negative or positive. It’s in between. The future is not going to be black or white. As with every technology on Earth, it really depends on how we use it and how we implement it,”

“At this point, it’s really about starting conversations — beginning conversations about regulations and figuring out what needs to be implemented in order to get to where we want to be. I hope that we can work with other governments and the private sector to help in our discussions and to really increase global participation in this debate.

“With regard to AI, one country can’t do everything. It’s a global effort,” Al Olama said.

Source: Futurism

Are there some things we just shouldn’t build? #AI

The prestigious Neural Information Processing Systems conference has a new topic on its agenda. Alongside the usual … concern about AI’s power.

Kate Crawford … urged attendees to start considering, and finding ways to mitigate, accidental or intentional harms caused by their creations.

“Amongst the very real excitement about what we can do there are also some really concerning problems arising.”

“In domains like medicine we can’t have these models just be a black box where something goes in and you get something out but don’t know why,” says Maithra Raghu, a machine-learning researcher at Google. On Monday, she presented open-source software developed with colleagues that can reveal what a machine-learning program is paying attention to in data. It may ultimately allow a doctor to see what part of a scan or patient history led an AI assistant to make a particular diagnosis.

“If you have a diversity of perspectives and background you might be more likely to check for bias against different groups,” says Hanna Wallach, a researcher at Microsoft.

Others in Long Beach hope to make the people building AI better reflect humanity. Like computer science as a whole, machine learning skews towards the white, male, and western. A parallel technical conference called Women in Machine Learning has run alongside NIPS for a decade. This Friday sees the first Black in AI workshop, intended to create a dedicated space for people of color in the field to present their work.

Towards the end of her talk Tuesday, Crawford suggested civil disobedience could shape the uses of AI. She talked of French engineer Rene Carmille, who sabotaged tabulating machines used by the Nazis to track French Jews. And she told today’s AI engineers to consider the lines they don’t want their technology to cross. “Are there some things we just shouldn’t build?” she asked.

Source: Wired

Researchers Combat Gender and Racial Bias in Artificial Intelligence

[Timnit] Gebru, 34, joined a Microsoft Corp. team called FATE—for Fairness, Accountability, Transparency and Ethics in AI. The program was set up three years ago to ferret out biases that creep into AI data and can skew results.

“I started to realize that I have to start thinking about things like bias. Even my own PhD work suffers from whatever issues you’d have with dataset bias.”

Companies, government agencies and hospitals are increasingly turning to machine learning, image recognition and other AI tools to help predict everything from the creditworthiness of a loan applicant to the preferred treatment for a person suffering from cancer. The tools have big blind spots that particularly affect women and minorities.

“The worry is if we don’t get this right, we could be making wrong decisions that have critical consequences to someone’s life, health or financial stability,” says Jeannette Wing, director of Columbia University’s Data Sciences Institute.

AI also has a disconcertingly human habit of amplifying stereotypes. PhD students at the University of Virginia and University of Washington examined a public dataset of photos and found that the images of people cooking were 33 percent more likely to picture women than men. When they ran the images through an AI model, the algorithms said women were 68 percent more likely to appear in the cooking photos.
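The "more likely" figures are ratios of counts. The following is a minimal sketch of the arithmetic. It assumes hypothetical counts chosen only to reproduce the 33 and 68 percent figures. These are not the study's data, and the researchers defined their own bias-amplification measure, which this does not reproduce. The gap between the two percentages shows how far the model pushed the skew beyond what was already in its training data.

```python
def percent_more_likely(count_a: int, count_b: int) -> float:
    """How much more often A occurs than B, as a percentage: (a / b - 1) * 100."""
    return (count_a / count_b - 1) * 100


# Hypothetical counts of cooking images, chosen only to match the quoted figures.
dataset_women, dataset_men = 133, 100  # labels in the training data
model_women, model_men = 168, 100      # labels predicted by the model

in_data = percent_more_likely(dataset_women, dataset_men)
in_model = percent_more_likely(model_women, model_men)

print(f"training data: women {in_data:.0f}% more likely")            # 33%
print(f"model output:  women {in_model:.0f}% more likely")           # 68%
print(f"amplification: {in_model - in_data:.0f} percentage points")  # 35
```

The model did more than inherit the 33 percent skew. Under these assumed counts it widened the skew by roughly 35 points.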

Researchers say it will probably take years to solve the bias problem.

The good news is that some of the smartest people in the world have turned their brainpower on the problem. “The field really has woken up and you are seeing some of the best computer scientists, often in concert with social scientists, writing great papers on it,” says University of Washington computer science professor Dan Weld. “There’s been a real call to arms.”

Source: Bloomberg

Artificial intelligence doesn’t have to be evil. We just have to teach it to be good

Training an AI platform on social media data, with the intent to reproduce a “human” experience, is fraught with risk. You could liken it to raising a baby on a steady diet of Fox News or CNN, with no input from its parents or social institutions. In either case, you might be breeding a monster.

Ultimately, social data — alone — represents neither who we actually are nor who we should be. Deeper still, as useful as the social graph can be in providing a training set for AI, what’s missing is a sense of ethics or a moral framework to evaluate all this data. From the spectrum of human experience shared on Twitter, Facebook and other networks, which behaviors should be modeled and which should be avoided? Which actions are right and which are wrong? What’s good … and what’s evil?

Here’s where science comes up short. The answers can’t be gleaned from any social data set. The best analytical tools won’t surface them, no matter how large the sample size.

But they just might be found in the Bible. And the Koran, the Torah, the Bhagavad Gita and the Buddhist Sutras. They’re in the work of Aristotle, Plato, Confucius, Descartes and other philosophers both ancient and modern.

AI, to be effective, needs an ethical underpinning. Data alone isn’t enough. AI needs religion — a code that doesn’t change based on context or training set.

In place of parents and priests, responsibility for this ethical education will increasingly rest on frontline developers and scientists.

As emphasized by leading AI researcher Will Bridewell, it’s critical that future developers are “aware of the ethical status of their work and understand the social implications of what they develop.” He goes so far as to advocate study in Aristotle’s ethics and Buddhist ethics so they can “better track intuitions about moral and ethical behavior.”

On a deeper level, responsibility rests with the organizations that employ these developers, the industries they’re part of, the governments that regulate those industries and — in the end — us.

Source: Recode. Ryan Holmes is the founder and CEO of Hootsuite.

How a half-educated tech elite delivered us into chaos

Donald Trump meeting PayPal co-founder Peter Thiel and Apple CEO Tim Cook in December last year. Photograph: Evan Vucci/AP

One of the biggest puzzles about our current predicament with fake news and the weaponisation of social media is why the folks who built this technology are so taken aback by what has happened.

We have a burgeoning genre of “OMG, what have we done?” angst coming from former Facebook and Google employees who have begun to realise that the cool stuff they worked on might have had, well, antisocial consequences.

Put simply, what Google and Facebook have built is a pair of amazingly sophisticated, computer-driven engines for extracting users’ personal information and data trails, refining them for sale to advertisers in high-speed data-trading auctions that are entirely unregulated and opaque to everyone except the companies themselves.

The purpose of this infrastructure was to enable companies to target people with carefully customised commercial messages and, as far as we know, they are pretty good at that.

It never seems to have occurred to them that their advertising engines could also be used to deliver precisely targeted ideological and political messages to voters. Hence the obvious question: how could such smart people be so stupid?

My hunch is it has something to do with their educational backgrounds. Take the Google co-founders. Sergey Brin studied mathematics and computer science. His partner, Larry Page, studied engineering and computer science. Zuckerberg dropped out of Harvard, where he was studying psychology and computer science, but seems to have been more interested in the latter.

Now mathematics, engineering and computer science are wonderful disciplines – intellectually demanding and fulfilling. And they are economically vital for any advanced society. But mastering them teaches students very little about society or history – or indeed about human nature.

As a consequence, the new masters of our universe are people who are essentially only half-educated. They have had no exposure to the humanities or the social sciences, the academic disciplines that aim to provide some understanding of how society works, of history and of the roles that beliefs, philosophies, laws, norms, religion and customs play in the evolution of human culture.

We are now beginning to see the consequences of the dominance of this half-educated elite.

Source: The Guardian – John Naughton is professor of the public understanding of technology at the Open University.

Artificial Intelligence researchers are “basically writing policy in code”

In a discussion, OpenAI Director Shivon Zilis and AI Fund Director of Ethics and Governance Tim Hwang shared their perspectives on AI's progress, its public perception, and how we can help ensure its responsible development going forward.

Hwang brought up the fact that artificial intelligence researchers are, in some ways, “basically writing policy in code” because of how influential the particular perspectives or biases inherent in these systems will be, and suggested that researchers could actually consciously set new cultural norms via their work.

Zilis added that the total number of people setting the tone for incredibly intelligent AI is probably “in the low thousands.”

She said this means we likely need more crossover discussion between this community and those making policy decisions, and Hwang added that there is currently "no good way for the public at large to signal" what moral choices should be made around the direction of AI development.

Zilis concluded that she has three guiding principles in terms of how she thinks about the future of responsible artificial intelligence development:

- First, the tech’s coming no matter what, so we need to figure out how to bend its arc with intent.

- Second, how do we get more people involved in the conversation?

- And finally, we need to do our best to front-load the regulation and public discussion needed on the issue, since ultimately, it's going to be a very powerful technology.

Source: TechCrunch

The idea that you had no idea any of this was happening strains my credibility

From left: Twitter’s acting general counsel Sean Edgett, Facebook’s general counsel Colin Stretch and Google’s senior vice president and general counsel Kent Walker, testify before the House Intelligence Committee on Wednesday, Nov. 1, 2017. Manuel Balce Ceneta/AP

Members of Congress confessed how difficult it was for them to even wrap their minds around how today’s Internet works — and can be abused. And for others, the hearings finally drove home the magnitude of the Big Tech platforms.

Sen. John Kennedy, R-La., marveled on Tuesday when Facebook said it could track the source of funding for all 5 million of its monthly advertisers.

“I think you do enormous good, but your power scares me,” he said.

There appears to be no quick patch for the malware afflicting America’s political life.

Over the course of three congressional hearings Tuesday and Wednesday, lawmakers fulminated, Big Tech witnesses were chastened but no decisive action appears to be in store to stop a foreign power from harnessing digital platforms to try to shape the information environment inside the United States.

Legislation offered in the Senate — assuming it passed, months or more from now — would change the calculus slightly: requiring more disclosure and transparency for political ads on Facebook and Twitter and other social platforms.

Even if it became law, however, it would not stop such ads from being sold, nor heal the deep political divisions exploited last year by foreign influence-mongers. The legislation also couldn’t stop a foreign power from using all the other weapons in its arsenal against the U.S., including cyberattacks, the deployment of human spies and others.

"Candidly, your companies know more about Americans, in many ways, than the United States government does. The idea that you had no idea any of this was happening strains my credibility," said Senate Intelligence Committee Vice Chairman Mark Warner, D-Va.

The companies also made clear they condemn the uses of their services they’ve discovered, which they said violate their policies in many cases.

They also talked more about the scale of the Russian digital operation they've uncovered up to this point — which is eye-watering: Facebook general counsel Colin Stretch acknowledged that as many as 150 million Americans may have seen posts or other content linked to Russia's influence campaign in the 2016 cycle.

"There is one thing I'm certain of, and it's this: Given the complexity of what we have seen, if anyone tells you they have figured it out, they are kidding ourselves. And we can't afford to kid ourselves about what happened last year — and continues to happen today," said Senate Intelligence Committee Chairman Richard Burr, R-N.C.

Source: NPR

Does Even Mark Zuckerberg Know What Facebook Is?

In a statement broadcast live on Facebook on September 21 and subsequently posted to his profile page, Zuckerberg pledged to increase the resources of Facebook’s security and election-integrity teams and to work “proactively to strengthen the democratic process.”

It was an admirable commitment. But reading through it, I kept getting stuck on one line: “We have been working to ensure the integrity of the German elections this weekend,” Zuckerberg writes. It’s a comforting sentence, a statement that shows Zuckerberg and Facebook are eager to restore trust in their system.

But … it’s not the kind of language we expect from media organizations, even the largest ones. It’s the language of governments, or political parties, or NGOs. A private company, working unilaterally to ensure election integrity in a country it’s not even based in?

Facebook has grown so big, and become so totalizing, that we can’t really grasp it all at once.

Like a four-dimensional object, we catch slices of it when it passes through the three-dimensional world we recognize. In one context, it looks and acts like a television broadcaster, but in this other context, an NGO. In a recent essay for the London Review of Books, John Lanchester argued that for all its rhetoric about connecting the world, the company is ultimately built to extract data from users to sell to advertisers. This may be true, but Facebook’s business model tells us only so much about how the network shapes the world.

Between March 23, 2015, when Ted Cruz announced his candidacy, and November 2016, 128 million people in America created nearly 10 billion Facebook posts, shares, likes, and comments about the election. (For scale, 137 million people voted last year.)

In February 2016, the media theorist Clay Shirky wrote about Facebook’s effect: “Reaching and persuading even a fraction of the electorate used to be so daunting that only two national orgs” — the two major national political parties — “could do it. Now dozens can.”

It used to be if you wanted to reach hundreds of millions of voters on the right, you needed to go through the GOP Establishment. But in 2016, the number of registered Republicans was a fraction of the number of daily American Facebook users, and the cost of reaching them directly was negligible.

"Facebook has the same kind of attentional power [as TV networks in the 1950s], but there is not a sense of responsibility," said Tim Wu, the Columbia Law School professor. "No constraints. No regulation. No oversight. Nothing. A bunch of algorithms, basically, designed to give people what they want to hear."

It tends to get forgotten, but Facebook briefly ran itself in part as a democracy: Between 2009 and 2012, users were given the opportunity to vote on changes to the site’s policy. But voter participation was minuscule, and Facebook felt the scheme “incentivized the quantity of comments over their quality.” In December 2012, that mechanism was abandoned “in favor of a system that leads to more meaningful feedback and engagement.”

Facebook had grown too big, and its users too complacent, for democracy.

Source: NY Magazine

Put Humans at the Center of AI

As the director of Stanford’s AI Lab and now as a chief scientist of Google Cloud, Fei-Fei Li is helping to spur the AI revolution. But it’s a revolution that needs to include more people. She spoke with MIT Technology Review senior editor Will Knight about why everyone benefits if we emphasize the human side of the technology.

Why did you join Google?

Researching cutting-edge AI is very satisfying and rewarding, but we’re seeing this great awakening, a great moment in history. For me it’s very important to think about AI’s impact in the world, and one of the most important missions is to democratize this technology. The cloud is this gigantic computing vehicle that delivers computing services to every single industry.

What have you learned so far?

We need to be much more human-centered.

If you look at where we are in AI, I would say it’s the great triumph of pattern recognition. It is very task-focused, it lacks contextual awareness, and it lacks the kind of flexible learning that humans have.

We also want to make technology that makes humans’ lives better, our world safer, our lives more productive and better. All this requires a layer of human-level communication and collaboration.

When you are making a technology this pervasive and this important for humanity, you want it to carry the values of the entire humanity, and serve the needs of the entire humanity.

If the developers of this technology do not represent all walks of life, it is very likely that this will be a biased technology. I say this as a technologist, a researcher, and a mother. And we need to be speaking about this clearly and loudly.

Source: MIT Technology Review

DeepMind Ethics and Society: the hallmark of a change in attitude

The unit, called DeepMind Ethics and Society, is not the AI Ethics Board that DeepMind was promised when it agreed to be acquired by Google in 2014. That board, which was convened by January 2016, was supposed to oversee all of the company’s AI research, but nothing has been heard of it in the three-and-a-half years since the acquisition. It remains a mystery who is on it, what they discuss, or even whether it has officially met.

DeepMind Ethics and Society is also not the same as DeepMind Health’s Independent Review Panel, a third body set up by the company to provide ethical oversight – in this case, of its specific operations in healthcare.

Nor is the new research unit the Partnership on Artificial Intelligence to Benefit People and Society, an external group founded in part by DeepMind and chaired by the company’s co-founder Mustafa Suleyman. That partnership, which was also co-founded by Facebook, Amazon, IBM and Microsoft, exists to “conduct research, recommend best practices, and publish research under an open licence in areas such as ethics, fairness and inclusivity”.

Nonetheless, its creation is the hallmark of a change in attitude from DeepMind over the past year, which has seen the company reassess its previously closed and secretive outlook. It is still battling a wave of bad publicity that started when it partnered with the Royal Free in secret, bringing the app Streams into active use in the London hospital without being open with the public about what data was being shared and how.

The research unit also reflects an urgency on the part of many AI practitioners to get ahead of growing concerns on the part of the public about how the new technology will shape the world around us.

Source: The Guardian

Why we launched DeepMind Ethics & Society

We believe AI can be of extraordinary benefit to the world, but only if held to the highest ethical standards.

Technology is not value neutral, and technologists must take responsibility for the ethical and social impact of their work.

As history attests, technological innovation in itself is no guarantee of broader social progress. The development of AI creates important and complex questions. Its impact on society—and on all our lives—is not something that should be left to chance. Beneficial outcomes and protections against harms must be actively fought for and built-in from the beginning. But in a field as complex as AI, this is easier said than done.