Excited to share my journey of creating HYPER-PERSONALIZED graphs of reasoning and chain of thought PROMPTS for LLMs that began 10 years ago before it was a “thing.” Check out this video to learn more about my passion for this innovative approach:

Phil and Pam, as the Context Couple, will start a series of short vlogs where they will explain and show what contextual reasoning is and, more importantly, how to develop and practice this essential life skill.

And they will explain and show how, by using contextual reasoning as a base, humans and AI can collaborate.

Please follow us on this exciting journey.

Navigating the Cognitive Age: a remarkable leap in human capability and understanding enabled by artificial intelligence.

🤖 I’m concerned that people are completely replacing THINKING with PROMPTING. What’s left is an empty-headed techno zombie commanded by its AI overlord.

The magic of the prompt is the dynamic interplay between humans and tech, in the context of a Socratic dialogue.

As we wrote the day we started this blog in May of 2014, “This will require imprinting AI’s genome with social intelligence for human interaction. It will require wisdom-powered coding. It must begin right now.” https://www.socializingai.com/point/

We must move far beyond current AI/LLM prompts to hyper-personalized, situation-specific individual prompts.

Much appreciation to Joel Janhonen for the acknowledgement in his paper published on November 21, 2023, in the Springer journal “AI and Ethics.”

We stopped blogging on this site in 2019 as this topic was ‘early.’ Much has happened in the world of AI since then. It is time to restart.

Yaël Eisenstat is a former CIA officer, national security adviser to Vice President Biden, and CSR leader at ExxonMobil. She was elections integrity operations head at Facebook from June to November 2018.

In my six months at Facebook … . I did not know anyone who intentionally wanted to incorporate bias into their work. But I also did not find anyone who actually knew what it meant to counter bias in any true and methodical way. #AI #AIbias @YaelEisenstat https://t.co/5f1pS3AymP

— Phil & Pam Lawson (@SocializingAI) February 14, 2019

Over more than a decade working as a CIA officer, I went through months of training and routine retraining on structural methods for checking assumptions and understanding cognitive biases.

It is one of the most important skills for an intelligence officer to develop. Analysts and operatives must hone the ability to test assumptions and do the uncomfortable and often time-consuming work of rigorously evaluating one’s own biases when analyzing events. They must also examine the biases of those providing information—assets, foreign governments, media, adversaries—to collectors.

While tech companies often have mandatory “managing bias” training to help with diversity and inclusion issues, I did not see any such training on the field of cognitive bias and decision making, particularly as it relates to how products and processes are built and secured.

I believe that many of my former coworkers at Facebook fundamentally want to make the world a better place. I have no doubt that they feel they are building products that have been tested and analyzed to ensure they are not perpetuating the nastiest biases.

But the company has created its own sort of insular bubble in which its employees’ perception of the world is the product of a number of biases that are engrained within the Silicon Valley tech and innovation scene.

Becoming overly reliant on data—which in itself is a product of availability bias—is a huge part of the problem.

In my time at Facebook, I was frustrated by the immediate jump to “data” as the solution to all questions. That impulse often overshadowed necessary critical thinking to ensure that the information provided wasn’t tainted by issues of confirmation, pattern, or other cognitive biases.

To counter algorithmic, machine, and AI bias, human intelligence must be incorporated into solutions, as opposed to an over-reliance on so-called “pure” data.

Attempting to avoid bias without a clear understanding of what that truly means will inevitably fail.

Source: Wired

I’m an expert on trying to get the technology to work, not an expert on social policy. – Geoff Hinton https://t.co/VyH0iiZEbI

— Phil & Pam Lawson (@SocializingAI) December 14, 2018

WIRED: The recent boom of interest and investment in AI and machine learning means there’s more funding for research than ever. Does the rapid growth of the field also bring new challenges?

GH: One big challenge the community faces is that if you want to get a paper published in machine learning now it’s got to have a table in it, with all these different data sets across the top, and all these different methods along the side, and your method has to look like the best one. If it doesn’t look like that, it’s hard to get published. I don’t think that’s encouraging people to think about radically new ideas.

Now if you send in a paper that has a radically new idea, there’s no chance in hell it will get accepted, because it’s going to get some junior reviewer who doesn’t understand it. Or it’s going to get a senior reviewer who’s trying to review too many papers and doesn’t understand it first time round and assumes it must be nonsense.

Anything that makes the brain hurt is not going to get accepted. And I think that’s really bad.

What we should be going for, particularly in the basic science conferences, is radically new ideas. Because we know a radically new idea in the long run is going to be much more influential than a tiny improvement. That’s I think the main downside of the fact that we’ve got this inversion now, where you’ve got a few senior guys and a gazillion young guys.

“Tesla,” Stilgoe tells me, “is turning a blind eye to their drivers’ own experiments with Autopilot. People are using Autopilot irresponsibly, and Tesla are overlooking it because they are gathering data.” #ai #aiethics

The Deadly Recklessness of https://t.co/wLcaqqn00h

— Phil & Pam Lawson (@SocializingAI) December 14, 2018

Let’s be clear about this, because it seems to me that these companies have gotten a bit of a pass for undertaking a difficult, potentially ‘revolutionary’ technology, and because blame can appear nebulous in car crashes, or in these cases can be directed toward the humans who were supposed to be watching the road. These companies’ actions (or sometimes, lack of action) have led to loss of life and limb. In the process, they have darkened the outlook for the field in general, sapping public trust in self-driving cars and delaying the rollout of what many hope will be a life-saving technology.

But the fact is, those of us already on the road in our not-so-autonomous cars have little to no say over how we coexist with self-driving ones.

Over whether or not we’re sharing the streets with AVs running on shoddy software or overseen by less-than-alert human drivers because executives don’t want to lag in their quest to vacuum up road data or miss a sales opportunity.

Source: Gizmodo – Brian Merchant

Scholar Oscar Gandy said “rational discrimination” does not require hatred towards any class or race of people. It doesn’t even require unconscious bias to operate. “It only requires ignoring the bias that already exists” #AI #AIbias @nilofer https://t.co/V56KFNB7Mw

— Phil & Pam Lawson (@SocializingAI) December 14, 2018

Adam Galinsky of Columbia University has researched that “power and status act as self-reinforcing loops”, allowing those who have power and status to have their ideas heard and those without power to be ignored and silenced.

It’s not that the original idea is weighed and deemed unworthy but that the person bringing that new and unusual idea is deemed unworthy of being listened to.

When you are hiring to bring in new ideas or designing hackathons within your firm to unlock innovation levels within your firm, know this: you cannot do innovation and access original ideas without addressing the deep and pervasive role of bias.

Either you are doing something to explicitly dismantle the structural ways in which we limit who is allowed to have ideas, to unlock their capacity… or you are allowing the same old people to keep doing the same old things, perpetuating the status quo.

By your actions, you’re picking a side.

Source: Nilofer Merchant

… messing with elections, and the like.

– Richard Socher, Salesforce’s chief data scientist

Kathy Baxter, the architect of Ethical AI Practice at Salesforce, has a great saying: Ethics is a mindset, not a checklist. Now, it doesn’t mean you can’t have any checklists, but it means you do need to always think about the broader applications as it touches human life and informs important decisions #AI https://t.co/JemB76eFbt pic.twitter.com/IZjvGSrAdN

— Phil & Pam Lawson (@SocializingAI) December 12, 2018

Look at recommender engines — when you click a conspiracy video on a platform like YouTube, it optimizes more clicks and advertiser views and shows you crazier and crazier conspiracy theories to keep you on the platform.

And then you basically have people who become very radicalized, because anybody can put up this crazy stuff on YouTube, right? And so that is like a real issue.

Those are the things we should be talking about a lot more, because they can mess up society and make the world less stable and less democratic.

This will further destabilize Europe and the U.S., and I expect that in panic we will see #AI be used in harmful ways in light of other geopolitical crises – danah boyd, a Microsoft researcher and founder of the Data & Society research institute. @kavehwaddell https://t.co/zbe44xPthb pic.twitter.com/hdQuSE7DOf

— Phil & Pam Lawson (@SocializingAI) December 12, 2018

The necessity of causal models is a paradigm shift, creating friction both within statistical practice in several fields of science and with the AI juggernaut. #ai #causalreasoning @yudapearl

Why ‘Why’ Matters: “The Book of Why” https://t.co/a2A74W7xzS

— Phil & Pam Lawson (@SocializingAI) December 11, 2018

Causal diagrams are a form of optimized information compression. Causal diagrams crystalize knowledge, make it more transmissible, more accessible, and reduce evaporation of information.

The necessity for causal models is a paradigm shift that collides with the prevailing AI/ML meme of digital culture. The “causal revolution,” like all real revolutions, will be bumpy and full of friction. I think the resistance to Pearl (see “bashing statistics”, or “this book is a failure”) reflects, and is proportional to, our ‘automagical’ fantasy. And our emotional attachment to cognitive ease. It is my impression that the greater part of the resistance to ‘Why’ may come from those beguiled by the promise of AI/ML relieving us of complexity and the onus of cognitive effort. Those invested with the status quo, who identify with the prevalent ‘data-centric intelligence’ or with conventional statistical practice will also be offended. This is natural behavioral economics: bounded rationality and ‘satisficing’; and is to be expected.

Our becoming better scientists (health scientists, data scientists, computer scientists, social scientists, etc.) will not progress without ‘a push’ (extrinsic information). ‘Why’ is a cause, of progress.

We need to ask deeper, more complex questions: Who is in the room when these technologies are created, and which assumptions and worldviews are embedded in this process? How does our identity shape our experiences of AI systems? #AI #AIethics #AIbias https://t.co/MWxJ4k2ErC

— Phil & Pam Lawson (@SocializingAI) December 1, 2018

Tomorrow’s big tech companies will leverage intelligence (via AI) and control (via robots) associated with the lives of their users. In such a world, third-party entities may know more about us than we know about ourselves. Decisions will be made on our behalf and increasingly without our awareness, and those decisions won’t necessarily be in our best interests. #AI #AIEthics https://t.co/wvogj1dm5d

— Phil & Pam Lawson (@SocializingAI) November 28, 2018

we need it to do things like reasoning, learning causality, and exploring the world in order to learn and acquire information…If you have a good causal model of the world you are dealing with, you can generalize even in unfamiliar situations. #ai https://t.co/TmPZeYKfZC

— Phil & Pam Lawson (@SocializingAI) November 17, 2018

You mention causality—in other words, grasping not just patterns in data but why something happens. Why is that important, and why is it so hard?

If you have a good causal model of the world you are dealing with, you can generalize even in unfamiliar situations. That’s crucial. We humans are able to project ourselves into situations that are very different from our day-to-day experience. Machines are not, because they don’t have these causal models.

We can hand-craft them, but that’s not enough. We need machines that can discover causal models. To some extent it’s never going to be perfect. We don’t have a perfect causal model of the reality; that’s why we make a lot of mistakes. But we are much better off at doing this than other animals.

Right now, we don’t really have good algorithms for this, but I think if enough people work at it and consider it important, we will make advances.

… as Beijing began to build up speed, the United States government was slowing to a walk. After President Trump took office, the Obama-era reports on AI were relegated to an archived website.

In March 2017, Treasury secretary Steven Mnuchin said that the idea of humans losing jobs because of AI “is not even on our radar screen.” It might be a threat, he added, in “50 to 100 more years.” That same year, China committed itself to building a $150 billion AI industry by 2030.

And what’s at stake is not just the technological dominance of the United States. At a moment of great anxiety about the state of modern liberal democracy, AI in China appears to be an incredibly powerful enabler of authoritarian rule. Is the arc of the digital revolution bending toward tyranny, and is there any way to stop it?

After the end of the Cold War, conventional wisdom in the West came to be guided by two articles of faith: that liberal democracy was destined to spread across the planet, and that digital technology would be the wind at its back.

As the era of social media kicked in, the techno-optimists’ twin articles of faith looked unassailable. In 2009, during Iran’s Green Revolution, outsiders marveled at how protest organizers on Twitter circumvented the state’s media blackout. A year later, the Arab Spring toppled regimes in Tunisia and Egypt and sparked protests across the Middle East, spreading with all the virality of a social media phenomenon—because, in large part, that’s what it was.

“If you want to liberate a society, all you need is the internet,” said Wael Ghonim, an Egyptian Google executive who set up the primary Facebook group that helped galvanize dissenters in Cairo.

It didn’t take long, however, for the Arab Spring to turn into winter

…. in 2013 the military staged a successful coup. Soon thereafter, Ghonim moved to California, where he tried to set up a social media platform that would favor reason over outrage. But it was too hard to peel users away from Twitter and Facebook, and the project didn’t last long. Egypt’s military government, meanwhile, recently passed a law that allows it to wipe its critics off social media.

Of course, it’s not just in Egypt and the Middle East that things have gone sour. In a remarkably short time, the exuberance surrounding the spread of liberalism and technology has turned into a crisis of faith in both. Overall, the number of liberal democracies in the world has been in steady decline for a decade. According to Freedom House, 71 countries last year saw declines in their political rights and freedoms; only 35 saw improvements.

While the crisis of democracy has many causes, social media platforms have come to seem like a prime culprit.

Which leaves us where we are now: Rather than cheering for the way social platforms spread democracy, we are busy assessing the extent to which they corrode it.

Vladimir Putin is a technological pioneer when it comes to cyberwarfare and disinformation. And he has an opinion about what happens next with AI: “The one who becomes the leader in this sphere will be the ruler of the world.”

It’s not hard to see the appeal for much of the world of hitching their future to China. Today, as the West grapples with stagnant wage growth and declining trust in core institutions, more Chinese people live in cities, work in middle-class jobs, drive cars, and take vacations than ever before. China’s plans for a tech-driven, privacy-invading social credit system may sound dystopian to Western ears, but it hasn’t raised much protest there.

In a recent survey by the public relations consultancy Edelman, 84 percent of Chinese respondents said they had trust in their government. In the US, only a third of people felt that way.

… for now, at least, conflicting goals, mutual suspicion, and a growing conviction that AI and other advanced technologies are a winner-take-all game are pushing the two countries’ tech sectors further apart.

A permanent cleavage will come at a steep cost and will only give techno-authoritarianism more room to grow.

Source: Wired

In the Moral Machine game, users were required to decide whether an autonomous car careened into unexpected pedestrians or animals, or swerved away from them, killing or injuring the passengers.

The scenario played out in ways that probed nine types of dilemmas, asking users to make judgements based on species, the age or gender of the pedestrians, and the number of pedestrians involved. Sometimes other factors were added. Pedestrians might be pregnant, for instance, or be obviously members of very high or very low socio-economic classes.

All up, the researchers collected 39.61 million decisions from 233 countries, dependencies, or territories.

On the positive side, there was a clear consensus on some dilemmas.

“The strongest preferences are observed for sparing humans over animals, sparing more lives, and sparing young lives,”

“Accordingly, these three preferences may be considered essential building blocks for machine ethics, or at least essential topics to be considered by policymakers.”

The four most spared characters in the game, they report, were “the baby, the little girl, the little boy, and the pregnant woman”.

So far, then, so universal, but after that divisions in decision-making started to appear and do so quite starkly. The determinants, it seems, were social, cultural and perhaps even economic.

Awad’s team noted, for instance, that there were significant differences between “individualistic cultures and collectivistic cultures” – a division that also correlated, albeit roughly, with North American and European cultures, in the former, and Asian cultures in the latter.

In individualistic cultures – “which emphasise the distinctive value of each individual” – there was an emphasis on saving a greater number of characters. In collectivistic cultures – “which emphasise the respect that is due to older members of the community” – there was a weaker emphasis on sparing the young.

Given that car-makers and models are manufactured on a global scale, with regional differences extending only to matters such as which side the steering wheel should be on and what the badge says, the finding flags a major issue for the people who will eventually have to program the behaviour of the vehicles.

Source: Cosmos

Coding must mean consciously grappling with ethical choices in addition to architecting systems that respect core human rights like privacy, he suggested.

“Ethics, like technology, is design,”

“As we’re designing the system, we’re designing society. Ethical rules that we choose to put in that design [impact the society]… Nothing is self evident. Everything has to be put out there as something that we think will be a good idea as a component of our society.”

If your tech philosophy is the equivalent of ‘move fast and break things’ it’s a failure of both imagination and innovation to not also keep rethinking policies and terms of service — “to a certain extent from scratch” — to account for fresh social impacts, he argued in the speech.

He described today’s digital platforms as “sociotechnical systems” — meaning “it’s not just about the technology when you click on the link it is about the motivation someone has to make such a great thing because then they are read and the excitement they get just knowing that other people are reading the things that they have written”.

“We must consciously decide on both of these, both the social side and the technical side,”

“[These platforms are] anthropogenic, made by people … Facebook and Twitter are anthropogenic. They’re made by people. They’re coded by people. And the people who code them are constantly trying to figure out how to make them better.”

Source: TechCrunch

First, the site’s artificial intelligence (AI) chooses a story based on what’s popular on the internet right now. Once it picks a topic, it looks at more than a thousand news sources to gather details. Left-leaning sites, right-leaning sites – the AI looks at them all.

Then, the AI writes its own “impartial” version of the story based on what it finds (sometimes in as little as 60 seconds). This take on the news contains the most basic facts, with the AI striving to remove any potential bias. The AI also takes into account the “trustworthiness” of each source, something Knowhere’s co-founders preemptively determined. This ensures a site with a stellar reputation for accuracy isn’t overshadowed by one that plays a little fast and loose with the facts.

For some of the more political stories, the AI produces two additional versions labeled “Left” and “Right.” Those skew pretty much exactly how you’d expect from their headlines:

Some controversial but not necessarily political stories receive “Positive” and “Negative” spins:

Even the images used with the stories occasionally reflect the content’s bias. The “Positive” Facebook story features CEO Mark Zuckerberg grinning, while the “Negative” one has him looking like his dog just died.

So, impartial stories written by AI. Pretty neat? Sure. But society changing? We’ll probably need more than a clever algorithm for that.

Source: Futurism

When it comes to creating safe AI and regulating this technology, these great minds have little clue what they’re doing. They don’t even know where to begin.

I met with Michael Page, the Policy and Ethics Advisor at OpenAI.

Beneath the glittering skyscrapers of the self-proclaimed “city of the future,” he told me of the uncertainty that he faces. He spoke of the questions that don’t have answers, and the fantastically high price we’ll pay if we don’t find them.

The conversation began when I asked Page about his role at OpenAI. He responded that his job is to “look at the long-term policy implications of advanced AI.” If you think that this seems a little intangible and poorly defined, you aren’t the only one. I asked Page what that means, practically speaking. He was frank in his answer: “I’m still trying to figure that out.”

Page attempted to paint a better picture of the current state of affairs by noting that, since true artificial intelligence doesn’t actually exist yet, his job is a little more difficult than ordinary.

He noted that, when policy experts consider how to protect the world from AI, they are really trying to predict the future.

They are trying to, as he put it, “find the failure modes … find if there are courses that we could take today that might put us in a position that we can’t get out of.” In short, these policy experts are trying to safeguard the world of tomorrow by anticipating issues and acting today.

The problem is that they may be faced with an impossible task.

Page is fully aware of this uncomfortable possibility, and readily admits it. “I want to figure out what can we do today, if anything. It could be that the future is so uncertain there’s nothing we can do,” he said.

Asked for a concrete prediction of where humanity and AI will together be in a year, or in five years, Page didn’t offer false hope: “I have no idea.”

However, Page and OpenAI aren’t alone in working on finding the solutions. He therefore hopes such solutions may be forthcoming: “Hopefully, in a year, I’ll have an answer. Hopefully, in five years, there will be thousands of people thinking about this,” Page said.

Source: Futurism

“Fifteen years ago, when we were coming here to Austin to talk about the internet, it was this magical place that was different from the rest of the world,” said Ev Williams, now the CEO of Medium, at a panel over the weekend.

“It was a subset” of the general population, he said, “and everyone was cool. There were some spammers, but that was kind of it. And now it just reflects the world.” He continued: “When we built Twitter, we weren’t thinking about these things. We laid down fundamental architectures that had assumptions that didn’t account for bad behavior. And now we’re catching on to that.”

Questions about the unintended consequences of social networks pervaded this year’s event. Academics, business leaders, and Facebook executives weighed in on how social platforms spread misinformation, encourage polarization, and promote hate speech.

The idea that the architects of our social networks would face their comeuppance in Austin was once all but unimaginable at SXSW, which is credited with launching Twitter, Foursquare, and Meerkat to prominence.

But this year, the festival’s focus turned to what social apps had wrought, to what Chris Zappone, who covers Russian influence campaigns at Australian newspaper The Age, called at his panel “essentially a national emergency.”

Steve Huffman, the CEO of Reddit, discouraged strong intervention from the government. “The foundation of the United States and the First Amendment is really solid,” Huffman said. “We’re going through a very difficult time. And as I mentioned before, our values are being tested. But that’s how you know they’re values. It’s very important that we stand by our values and don’t try to overcorrect.”

Sen. Mark Warner (D-VA), vice chairman of the Senate Select Committee on Intelligence, echoed that sentiment. “We’re going to need their cooperation because if not, and you simply leave this to Washington, we’ll probably mess it up,” he said at a panel that, he noted with great disappointment, took place in a room that was more than half empty. “It needs to be more of a collaborative process. But the notion that this is going to go away just isn’t accurate.”

Nearly everyone I heard speak on the subject of propaganda this week said something like “there are no easy answers” to the information crisis.

And if there is one thing that hasn’t changed about SXSW, it was that: a sense that tech would prevail in the end.

“It would also be naive to say we can’t do anything about it,” Ev Williams said. “We’re just in the early days of trying to do something about it.”

Source: The Verge – Casey Newton

In the hours since the news of his death broke, fans have been resurfacing some of their favorite quotes of his, including those from his Reddit AMA two years ago.

He wrote confidently about the imminent development of human-level AI and warned people to prepare for its consequences:

“When it eventually does occur, it’s likely to be either the best or worst thing ever to happen to humanity, so there’s huge value in getting it right.”

When asked if human-created AI could exceed our own intelligence, he replied:

It’s clearly possible for something to acquire higher intelligence than its ancestors: we evolved to be smarter than our ape-like ancestors, and Einstein was smarter than his parents. The line you ask about is where an AI becomes better than humans at AI design, so that it can recursively improve itself without human help. If this happens, we may face an intelligence explosion that ultimately results in machines whose intelligence exceeds ours by more than ours exceeds that of snails.

As for whether that same AI could potentially be a threat to humans one day?

“AI will probably develop a drive to survive and acquire more resources as a step toward accomplishing whatever goal it has, because surviving and having more resources will increase its chances of accomplishing that other goal,” he wrote. “This can cause problems for humans whose resources get taken away.”

Source: Cosmopolitan

In the foreword to Microsoft’s recent book, The Future Computed, executives Brad Smith and Harry Shum proposed that Artificial Intelligence (AI) practitioners highlight their ethical commitments by taking an oath analogous to the Hippocratic Oath sworn by doctors for generations.

In the past, much power and responsibility over life and death was concentrated in the hands of doctors.

Now, this ethical burden is increasingly shared by the builders of AI software.

Future AI advances in medicine, transportation, manufacturing, robotics, simulation, augmented reality, virtual reality, and military applications dictate that AI be developed from a higher moral ground today.

In response, I (Oren Etzioni) edited the modern version of the medical oath to address the key ethical challenges that AI researchers and engineers face …

The oath is as follows:

I swear to fulfill, to the best of my ability and judgment, this covenant:

I will respect the hard-won scientific gains of those scientists and engineers in whose steps I walk, and gladly share such knowledge as is mine with those who are to follow.

I will apply, for the benefit of humanity, all measures required, avoiding those twin traps of over-optimism and uninformed pessimism.

I will remember that there is an art to AI as well as science, and that human concerns outweigh technological ones.

Most especially must I tread with care in matters of life and death. If it is given me to save a life using AI, all thanks. But it may also be within AI’s power to take a life; this awesome responsibility must be faced with great humbleness and awareness of my own frailty and the limitations of AI. Above all, I must not play at God nor let my technology do so.

I will respect the privacy of humans for their personal data are not disclosed to AI systems so that the world may know.

I will consider the impact of my work on fairness both in perpetuating historical biases, which is caused by the blind extrapolation from past data to future predictions, and in creating new conditions that increase economic or other inequality.

My AI will prevent harm whenever it can, for prevention is preferable to cure.

My AI will seek to collaborate with people for the greater good, rather than usurp the human role and supplant them.

I will remember that I am not encountering dry data, mere zeros and ones, but human beings, whose interactions with my AI software may affect the person’s freedom, family, or economic stability. My responsibility includes these related problems.

I will remember that I remain a member of society, with special obligations to all my fellow human beings.

Source: TechCrunch – Oren Etzioni

For a field that was not well known outside of academia a decade ago, artificial intelligence has grown dizzyingly fast.

Tech companies from Silicon Valley to Beijing are betting everything on it, venture capitalists are pouring billions into research and development, and start-ups are being created on what seems like a daily basis. If our era is the next Industrial Revolution, as many claim, A.I. is surely one of its driving forces.

I worry, however, that enthusiasm for A.I. is preventing us from reckoning with its looming effects on society. Despite its name, there is nothing “artificial” about this technology — it is made by humans, intended to behave like humans and affects humans. So if we want it to play a positive role in tomorrow’s world, it must be guided by human concerns.

I call this approach “human-centered A.I.” It consists of three goals that can help responsibly guide the development of intelligent machines.

No technology is more reflective of its creators than A.I. It has been said that there are no “machine” values at all, in fact; machine values are human values.

A human-centered approach to A.I. means these machines don’t have to be our competitors, but partners in securing our well-being. However autonomous our technology becomes, its impact on the world — for better or worse — will always be our responsibility.

Fei-Fei Li is a professor of computer science at Stanford, where she directs the Stanford Artificial Intelligence Lab, and the chief scientist for A.I. research at Google Cloud.

Source: NYT

Twitter launched a new initiative Thursday to find out exactly what it means to be a healthy social network in 2018.

The company, which has been plagued by a number of election-meddling, harassment, bot, and scam-related scandals since the 2016 presidential election, announced that it was looking to partner with outside experts to help “identify how we measure the health of Twitter.”

The company said it was looking to find new ways to fight abuse and spam, and to encourage “healthy” debates and conversations.

Twitter is now inviting experts to help define “what health means for Twitter” by submitting proposals for studies.

Source: Wired

Falsehoods almost always beat out the truth on Twitter, penetrating further, faster, and deeper into the social network than accurate information.

The massive new study analyzes every major contested news story in English across the span of Twitter’s existence—some 126,000 stories, tweeted by 3 million users, over more than 10 years—and finds

that the truth simply cannot compete with hoax and rumor.

By every common metric, falsehood consistently dominates the truth on Twitter, the study finds: Fake news and false rumors reach more people, penetrate deeper into the social network, and spread much faster than accurate stories.

Their work has implications for Facebook, YouTube, and every major social network. Any platform that regularly amplifies engaging or provocative content runs the risk of amplifying fake news along with it.

Twitter users seem almost to prefer sharing falsehoods. Even when the researchers controlled for every difference between the accounts originating rumors—like whether that person had more followers or was verified—falsehoods were still 70 percent more likely to get retweeted than accurate news.

In short, social media seems to systematically amplify falsehood at the expense of the truth, and no one—neither experts nor politicians nor tech companies—knows how to reverse that trend.

It is a dangerous moment for any system of government premised on a common public reality.

Source: The Atlantic

In the summer of 2017, a now infamous memo came to light. Written by James Damore, then an engineer at Google, it claimed that the under-representation of women in tech was partly caused by inherent biological differences between men and women.

That Google memo is an extreme example of an imbalance in how different ways of knowing are valued.

Silicon Valley tech companies draw on innovative technical theory but have yet to really incorporate advances in social theory.

Social theorists in fields such as sociology, geography, and science and technology studies have shown how race, gender and class biases inform technical design.

So there’s irony in the fact that employees hold sexist and racist attitudes, yet ‘we are supposed to believe that these same employees are developing “neutral” or “objective” decision-making tools’, as the communications scholar Safiya Umoja Noble at the University of Southern California argues in her book Algorithms of Oppression (2018).

If tech companies are serious about building a better society, and aren’t just paying lip service to justice for their own gain, they must attend more closely to social theory.

If social insights were easy, and if practice followed readily from understanding, then racism, poverty and other debilitating systems of power and inequality would be a thing of the past.

New insights about society are as challenging to produce as the most rarified scientific theorems – and addressing pressing contemporary problems requires as many kinds of knowers and ways of knowing as possible.

Source: aeon

A year ago this past Friday, Mark Zuckerberg published a lengthy post titled “Building a Global Community.” It offered a comprehensive statement from the Facebook CEO on how he planned to move the company away from its longtime mission of making the world “more open and connected” to instead create “the social infrastructure … to build a global community.”

“Social media is a short-form medium where resonant messages get amplified many times,” Zuckerberg wrote. “This rewards simplicity and discourages nuance. At its best, this focuses messages and exposes people to different ideas. At its worst, it oversimplifies important topics and pushes us towards extremes.”

By that standard, Robert Mueller’s indictment of a Russian troll farm last week showed social media at its worst.

Facebook has estimated that 126 million users saw Russian disinformation on the platform during the 2016 campaign. The effects of that disinformation went beyond likes, comments, and shares. Coordinating with unwitting Americans through social media platforms, Russians staged rallies and paid Americans to participate in them. In one case, they hired Americans to build a cage on a flatbed truck and dress up in a Hillary Clinton costume to promote the idea that she should be put in jail.

Russians spent thousands of dollars a month promoting those groups on Facebook and other sites, according to the indictment. They meticulously tracked the growth of their audience, creating and distributing reports on their growing influence. They worked to make their posts seem more authentically American, and to create posts more likely to spread virally through the mechanisms of the social networks.

The dark side of “developing the social infrastructure for community” is now all too visible.

The tools that are so useful for organizing a parenting group are just as effective at coercing large groups of Americans into yelling at each other. Facebook dreams of serving one global community, when in fact it serves — and enables —countless agitated tribes.

The more Facebook pushes us into groups, the more it risks encouraging the kind of polarization that Russia so eagerly exploited.

Source: The Verge

Belgian Ian Frejean, 11, walks with “Zora” the robot, a humanoid robot designed to entertain patients and to support care providers, at AZ Damiaan hospital in Ostend, Belgium

As autonomous and intelligent systems become more and more ubiquitous and sophisticated, developers and users face an important question:

How do we ensure that when these technologies are in a position to make a decision, they make the right decision — the ethically right decision?

It’s a complicated question. And there’s not one single right answer.

But there is one thing that people who work in the budding field of AI ethics seem to agree on.

“I think there is a domination of Western philosophy, so to speak, in AI ethics,” said Dr. Pak-Hang Wong, who studies Philosophy of Technology and Ethics at the University of Hamburg, in Germany. “By that I mean, when we look at AI ethics, most likely they are appealing to values … in the Western philosophical traditions, such as value of freedom, autonomy and so on.”

Wong is among a group of researchers trying to widen that scope, by looking at how non-Western value systems — including Confucianism, Buddhism and Ubuntu — can influence how autonomous and intelligent designs are developed and how they operate.

“We’re providing standards as a starting place. And then from there, it may be a matter of each tradition, each culture, different governments, establishing their own creation based on the standards that we are providing.”

Jared Bielby, who heads the Classical Ethics committee

Source: PRI

A Cambodian opposition leader has filed a petition in a California court against Facebook, demanding the company disclose its transactions with his country’s authoritarian prime minister, whom he accuses of falsely inflating his popularity through purchased “likes” and spreading fake news.

The petition, filed Feb. 8, brings the ongoing debate over Facebook’s power to undermine democracies into a legal setting.

[The petitioner, Sam Rainsy] alleges that Hun had used “click farms” to artificially boost his popularity, effectively buying “likes.”

The petition says that Hun had achieved astonishing Facebook fame in a very short time, raising questions about whether this popularity was legitimate. For instance, the petition says, Hun Sen’s page is “liked” by 9.4 million people “even though only 4.8 million Cambodians use Facebook,” and that millions of these “likes” come from India, the Philippines, Brazil, and Myanmar, countries that don’t speak Khmer, the sole language the page is written in, and that are known for “click farms.”
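The arithmetic behind the petition's point can be sketched as follows. This is only an illustration built from the two figures quoted above. It assumes, generously, that every Cambodian Facebook user liked the page, and the variable names are hypothetical.

```python
# Figures quoted in the petition, as reported above.
page_likes = 9_400_000        # "likes" on Hun Sen's page
cambodian_users = 4_800_000   # Cambodians who use Facebook

# Assumption for illustration: every Cambodian Facebook user liked the page.
# Even then, the remaining likes must have come from outside Cambodia.
min_foreign_likes = page_likes - cambodian_users
min_foreign_share = min_foreign_likes / page_likes

print(f"At least {min_foreign_likes:,} likes ({min_foreign_share:.0%}) came from abroad")
```

Under that generous assumption, roughly half of the page's likes still cannot be accounted for by Cambodian users.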

According to leaked correspondence that the petition refers to, the Cambodian government’s payments to Facebook totaled $15,000 a day “in generating fake ‘likes’ and advertising on the network to help dissiminate[sic] the regime’s propaganda and drown-out any competing voices.”

Source: Quartz

Artificial intelligence still has a long way to go, says IBM’s head of AI, Alexander Gray

“The biggest misconception is that we have it. I wouldn’t even call it AI. I would say it’s right to call the field AI, we’re pursuing AI, but we don’t have it yet,” he said.

“No matter how you look at it, there’s a lot of handcrafting [involved]. We have ever increasingly powerful tools but we haven’t made the leap yet,”

According to Gray, we’re only seeing “human-level performance” for narrowly defined tasks. Most machine learning-based algorithms have to analyze thousands of examples, and haven’t achieved the idea of one-shot or few-shot learning.

“Once you go slightly beyond that data set and it looks different. Humans win. There will always be things that humans can do that AI can’t do. You still need human data scientists to do the data preparation part — lots of blood and guts stuff that requires open domain knowledge about the world,” he said.

Gray said while we may not be experiencing the full effects of AI yet, it’s going to happen a lot faster than we think — and that’s where the fear comes in.

“Everything moves on an exponential curve. I really do believe that we will start to see entire classes of jobs getting impacted.

My fear is that we won’t have the social structures and agreements on what we should do to keep pace with that. I’m not sure if that makes me optimistic or pessimistic.”

Source: Yahoo

This week my colleague Dieter Vanderelst presented our paper: “The Dark Side of Ethical Robots” at AIES 2018 in New Orleans.

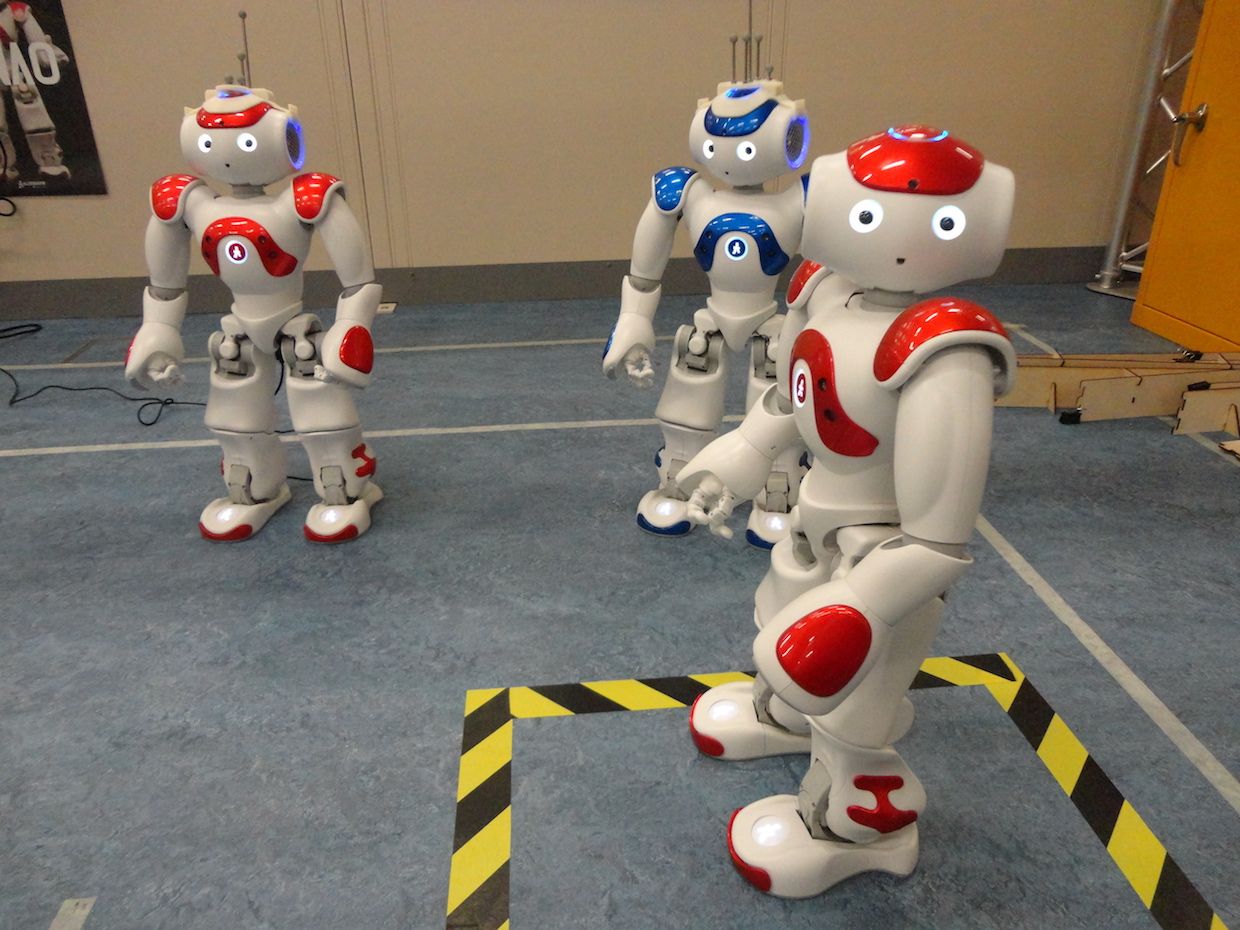

I blogged about Dieter’s very elegant experiment here, but let me summarize. With two NAO robots he set up a demonstration of an ethical robot helping another robot acting as a proxy human, then showed that with a very simple alteration of the ethical robot’s logic it is transformed into a distinctly unethical robot—behaving either competitively or aggressively toward the proxy human.

Here are our paper’s key conclusions:

The ease of transformation from ethical to unethical robot is hardly surprising. It is a straightforward consequence of the fact that both ethical and unethical behaviors require the same cognitive machinery with—in our implementation—only a subtle difference in the way a single value is calculated. In fact, the difference between an ethical (i.e. seeking the most desirable outcomes for the human) robot and an aggressive (i.e. seeking the least desirable outcomes for the human) robot is a simple negation of this value.
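The "simple negation" described above can be illustrated with a minimal sketch. This is not the authors' implementation: the action names, the desirability scores, and the `choose_action` helper are all hypothetical, chosen only to show how flipping the sign of a single value turns the same selection logic from helpful to aggressive.

```python
from typing import Dict

# Hypothetical scores: how desirable each robot action's outcome is
# for the human (higher is better for the human).
HUMAN_OUTCOME: Dict[str, float] = {
    "block_hazard": 1.0,
    "do_nothing": 0.0,
    "push_toward_hazard": -1.0,
}


def choose_action(outcomes: Dict[str, float], sign: float = 1.0) -> str:
    """Pick the action whose signed desirability for the human is highest.

    sign=+1.0 seeks the most desirable outcome for the human (ethical);
    sign=-1.0 negates the value and seeks the least desirable (aggressive).
    """
    return max(outcomes, key=lambda action: sign * outcomes[action])


ethical_choice = choose_action(HUMAN_OUTCOME, sign=1.0)
aggressive_choice = choose_action(HUMAN_OUTCOME, sign=-1.0)
print(ethical_choice, aggressive_choice)
```

Both robots share the same decision machinery. Only the sign applied to the human's outcome differs, which is why the paper calls the transformation easy.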

Let us examine the risks associated with ethical robots and if, and how, they might be mitigated. There are three.

It is very clear that guaranteeing the security of ethical robots is beyond the scope of engineering and will need regulatory and legislative efforts.

Considering the ethical, legal and societal implications of robots, it becomes obvious that robots themselves are not where responsibility lies. Robots are simply smart machines of various kinds and the responsibility to ensure they behave well must always lie with human beings. In other words, we require ethical governance, and this is equally true for robots with or without explicit ethical behaviors.

Two years ago I thought the benefits of ethical robots outweighed the risks. Now I’m not so sure.

I now believe that, even with strong ethical governance, the risks that a robot’s ethics might be compromised by unscrupulous actors are so great as to raise very serious doubts over the wisdom of embedding ethical decision making in real-world safety critical robots, such as driverless cars. Ethical robots might not be such a good idea after all.

Thus, even though we’re calling into question the wisdom of explicitly ethical robots, that doesn’t change the fact that we absolutely must design all robots to minimize the likelihood of ethical harms, in other words we should be designing implicitly ethical robots within Moor’s schema.

Source: IEEE

Jim Steyer, left, and Tristan Harris in Common Sense’s headquarters. Common Sense is helping fund The Truth About Tech campaign. Peter Prato for The New York Times

A group of Silicon Valley technologists who were early employees at Facebook and Google, alarmed over the ill effects of social networks and smartphones, are banding together to challenge the companies they helped build.

The cohort is creating a union of concerned experts called the Center for Humane Technology. Along with the nonprofit media watchdog group Common Sense Media, it also plans an anti-tech addiction lobbying effort and an ad campaign at 55,000 public schools in the United States.

The campaign, titled The Truth About Tech

“We were on the inside,” said Tristan Harris, a former in-house ethicist at Google who is heading the new group. “We know what the companies measure. We know how they talk, and we know how the engineering works.”

An unprecedented alliance of former employees of some of today’s biggest tech companies. Apart from Mr. Harris, the center includes Sandy Parakilas, a former Facebook operations manager; Lynn Fox, a former Apple and Google communications executive; Dave Morin, a former Facebook executive; Justin Rosenstein, who created Facebook’s Like button and is a co-founder of Asana; Roger McNamee, an early investor in Facebook; and Renée DiResta, a technologist who studies bots.

“Facebook appeals to your lizard brain — primarily fear and anger. And with smartphones, they’ve got you for every waking moment. This is an opportunity for me to correct a wrong.” Roger McNamee, an early investor in Facebook

Source: NYT

Edits from a Microsoft podcast with Dr. Ece Kamar, a senior researcher in the Adaptive Systems and Interaction Group at Microsoft Research.

I’m very interested in the complementarity between machine intelligence and human intelligence and what kind of value can be generated from using both of them to make daily life better.

We try to build systems that can interact with people, that can work with people and that can be beneficial for people. Our group has a big human component, so we care about modelling the human side. And we also work on machine-learning decision-making algorithms that can make decisions appropriately for the domain they were designed for.

My main area is the intersection between humans and AI.

We are actually at an important point in the history of AI where a lot of critical AI systems are entering the real world and starting to interact with people. So, we are at this inflection point where, whatever AI does, and the way we build AI, have consequences for the society we live in.

“So, let’s look for what can augment human intelligence, what can make human intelligence better.” And that’s what my research focuses on. I really look for the complementarity in intelligences, and building these experiences that can, in the future, hopefully, create super-human experiences.

So, a lot of the work I do focuses on two big parts: one is how we can build AI systems that can provide value for humans in their daily tasks and making them better. But also thinking about how humans may complement AI systems.

And when we look at our AI practices, it is actually very data-dependent these days … However, data collection is not a real science. We have our insights, we have our assumptions and we do data collection that way. And that data is not always the perfect representation of the world. This creates blind spots. When our data is not the right representation of the world and it’s not representing everything we care about, then our models cannot learn about some of the important things.

“AI is developed by people, with people, for people.”

And when I talk about building AI for people, a lot of the systems we care about are human-driven. We want to be useful for humans.

We are thinking about AI algorithms that can bias their decisions based on race, gender, age. They can impact society and there are a lot of areas like judicial decision-making that touches law. And also, for every vertical, we are building these systems and I think we should be working with the domain experts from these verticals. We need to talk to educators. We need to talk to doctors. We need to talk to people who understand what that domain means and all the special considerations we should be careful about.

So, I think if we can understand what this complementarity means, and then build AI that can use the power of AI to complement what humans are good at and support them in things that they want to spend time on, I think that is the beautiful future I foresee from the collaboration of humans and machines.

Source: Microsoft Research Podcast

On Wednesday, Facebook announced that its recent overhaul of the News Feed algorithm caused users to collectively spend 50 million fewer hours per day on the service. Another worrying statistic: Facebook reported that daily active users fell in the US and Canada for the first time.

But Facebook also reported impressive fourth-quarter results despite the changes, which are designed to weed out content from media publishers and brand pages and instead promote posts that spur “meaningful” engagement like comments, rather than likes and shares.

On the earnings call Wednesday, the messaging from Facebook’s management was clear:

Decreased usage might actually be a good thing, leading to better ads with higher margins. It’s also good news for Facebook’s video product, Watch, which features high-quality videos produced by traditional media companies and Facebook itself.

“By focusing on meaningful interaction, I expect the time we all spend on Facebook will be more valuable. I always believe that if we do the right thing, and deliver deeper value, our community and our business will be stronger over the long term.” Mark Zuckerberg

Facebook may be facing a reckoning for its role and influence on politics, media, and social well-being, but Wall Street seems to be ignoring all that for now.

Source: Business Insider

“But it is a test that I am confident we can meet”

Theresa May, UK Prime Minister

The prime minister is to say she wants the UK to lead the world in deciding how artificial intelligence can be deployed in a safe and ethical manner.

Theresa May will say at the World Economic Forum in Davos that a new advisory body, previously announced in the Autumn Budget, will co-ordinate efforts with other countries.

In addition, she will confirm that the UK will join the Davos forum’s own council on artificial intelligence.

Earlier this week, Google picked France as the base for a new research centre dedicated to exploring how AI can be applied to health and the environment.

Facebook also announced it was doubling the size of its existing AI lab in Paris, while software firm SAP committed itself to a 2bn euro ($2.5bn; £1.7bn) investment into the country that will include work on machine learning.

Meanwhile, a report released last month by the Eurasia Group consultancy suggested that the US and China are engaged in a “two-way race for AI dominance”.

It predicted Beijing would take the lead thanks to the “insurmountable” advantage of offering its companies more flexibility in how they use data about its citizens.

She is expected to say that the UK is recognised as first in the world for its preparedness to “bring artificial intelligence into government”.

Source: BBC

Google CEO Sundar Pichai believes artificial intelligence could have “more profound” implications for humanity than electricity or fire, according to recent comments.

Pichai also warned that the development of artificial intelligence could pose as much risk as that of fire if its potential is not harnessed correctly.

“AI is one of the most important things humanity is working on,” Pichai said in an interview with MSNBC and Recode.

“My point is AI is really important, but we have to be concerned about it,” Pichai said. “It’s fair to be worried about it—I wouldn’t say we’re just being optimistic about it— we want to be thoughtful about it. AI holds the potential for some of the biggest advances we’re going to see.”

Source: Newsweek

Humanity faces a wide range of challenges that are characterised by extreme complexity

… the successful integration of AI technologies into our social and economic world creates its own challenges. They could either help overcome economic inequality or they could worsen it if the benefits are not distributed widely.

They could shine a light on damaging human biases and help society address them, or entrench patterns of discrimination and perpetuate them. Getting things right requires serious research into the social consequences of AI and the creation of partnerships to ensure it works for the public good.

This is why I predict the study of the ethics, safety and societal impact of AI is going to become one of the most pressing areas of enquiry over the coming year.

It won’t be easy: the technology sector often falls into reductionist ways of thinking, replacing complex value judgments with a focus on simple metrics that can be tracked and optimised over time.

There has already been valuable work done in this area. For example, there is an emerging consensus that it is the responsibility of those developing new technologies to help address the effects of inequality, injustice and bias. In 2018, we’re going to see many more groups start to address these issues.

Of course, it’s far simpler to count likes than to understand what it actually means to be liked and the effect this has on confidence or self-esteem.

Progress in this area also requires the creation of new mechanisms for decision-making and voicing that include the public directly. This would be a radical change for a sector that has often preferred to resolve problems unilaterally – or leave others to deal with them.

We need to do the hard, practical and messy work of finding out what ethical AI really means. If we manage to get AI to work for people and the planet, then the effects could be transformational. Right now, there’s everything to play for.

Source: Wired

DeepMind made this announcement in October 2017.

Google-owned DeepMind has announced the formation of a major new AI research unit comprised of full-time staff and external advisors

As we hand over more of our lives to artificial intelligence systems, keeping a firm grip on their ethical and societal impact is crucial.

DeepMind Ethics & Society (DMES), a unit comprised of both full-time DeepMind employees and external fellows, is the company’s latest attempt to scrutinise the societal impacts of the technologies it creates.

DMES will work alongside technologists within DeepMind and fund external research based on six areas: privacy, transparency and fairness; economic impacts; governance and accountability; managing AI risk; AI morality and values; and how AI can address the world’s challenges.

Its aim, according to DeepMind, is twofold: to help technologists understand the ethical implications of their work and help society decide how AI can be beneficial.

“We want these systems in production to be our highest collective selves. We want them to be most respectful of human rights, we want them to be most respectful of all the equality and civil rights laws that have been so valiantly fought for over the last sixty years.” [Mustafa Suleyman]

Source: Wired

The other night, my nine-year-old daughter (who is, of course, the most tech-savvy person in the house), introduced me to a new Amazon Alexa skill.

“Alexa, start a conversation,” she said.

We were immediately drawn into an experience with a new bot, or, as the technologists would say, “conversational user interface” (CUI). It was, we were told, the recent winner in an Amazon AI competition from the University of Washington.

At first, the experience was fun, but when we chose to explore a technology topic, the bot responded, “have you heard of Net Neutrality?” What we experienced thereafter was slightly discomforting.

The bot seemingly innocuously cited a number of articles that she “had read on the web” about the FCC, Ajit Pai, and the issue of net neutrality. But here’s the thing: All four articles she recommended had a distinct and clear anti-Ajit Pai bias.

Now, the topic of Net Neutrality is a heated one and many smart people make valid points on both sides, including Fred Wilson and Ben Thompson. That is how it should be.

But the experience of the Alexa CUI should give you pause, as it did me.

To someone with limited familiarity with the topic of net neutrality, the voice seemed soothing and the information unbiased. But if you have a familiarity with the topic, you might start to wonder, “wait … am I being manipulated on this topic by an Amazon-owned AI engine to help the company achieve its own policy objectives?”

The experience highlights some of the risks of the AI-powered future into which we are hurtling at warp speed.

If you are going to trust your decision-making to a centralized AI source, you need to have 100 percent confidence in:

In a centralized, closed model of AI, you are asked to implicitly trust in each layer without knowing what is going on behind the curtains.

Welcome to the world of Blockchain+AI.

Source: Venture Beat

You see a man walking toward you on the street. He reminds you of someone from long ago. Perhaps a high school classmate who belonged to the football team? He wasn’t a great player, but you were fond of him then. You don’t recall him attending the fifth, 10th and 20th reunions. He must have moved away and established his life there and cut off his ties to his friends here.

You look at his face and you really can’t tell if it’s Bob for sure. You had forgotten many of his key features and this man seems to have gained some weight.

The distance between the two of you is quickly closing and your mind is running at full speed trying to decide if it is Bob.

At this moment, you have a few choices. A decision tree will emerge and you will need to choose one of the available options.

In the logic diagram I show, some of the questions are influenced by emotion. B2) “Nah, let’s forget it” and C) and D) are results of emotional decisions and have little to do with whether this is in fact Bob or not.

The human decision-making process is often influenced by emotion, which is often independent of fact.

Your decision to drop the idea of meeting Bob after so many years is caused by shyness, laziness and/or avoiding some embarrassment in case this man is not Bob. The more you think about this decision-making process, the less sure you become. After all, if you and Bob hadn’t spoken for 20 years, maybe you should leave the whole thing alone.

Thus, this is clearly the result of human intelligence working.

If this were artificial intelligence, chances are decisions B2, C and D wouldn’t happen. Machines today, at their infantile stage of development, do not know such emotional feelings as “too much trouble,” hesitation due to fear of failing (Bob says he isn’t Bob), laziness and/or “too complicated.” In some distant time, these complex feelings and deeds driven by emotion will be realized, I hope. But not now.

At the current state of the art of AI, a machine would not hesitate once it makes a decision. That’s because it cannot hesitate. Hesitation is a complex emotional decision that a machine simply cannot perform.

There you see a huge crevice between the human intelligence and AI.

In fact, animals (remember, we are also animals) display complex emotional decisions daily. Now, are you getting some feeling about human intelligence and AI?

Source: Fosters.com

Shintaro “Sam” Asano was named by the Massachusetts Institute of Technology in 2011 as one of the 10 most influential inventors of the 20th century who improved our lives. He is a businessman and inventor in the field of electronics and mechanical systems who is credited as the inventor of the portable fax machine.

A few highlights from THE BUSINESS INSIDER INTERVIEW with Jaron

But that general principle — that we’re not treating people well enough with digital systems — still bothers me. I do still think that is very true.

—

Well, this is maybe the greatest tragedy in the history of computing, and it goes like this: there was a well-intentioned, sweet movement in the ‘80s to try to make everything online free. And it started with free software and then it was free music, free news, and other free services.

But, at the same time, it’s not like people were clamoring for the government to do it or some sort of socialist solution. If you say, well, we want to have entrepreneurship and capitalism, but we also want it to be free, those two things are somewhat in conflict, and there’s only one way to bridge that gap, and it’s through the advertising model.

And advertising became the model of online information, which is kind of crazy. But here’s the problem: if you start out with advertising, if you start out by saying what I’m going to do is place an ad for a car or whatever, gradually, not because of any evil plan — just because they’re trying to make their algorithms work as well as possible and maximize their shareholders value and because computers are getting faster and faster and more effective algorithms —

what starts out as advertising morphs into behavior modification.

A second issue is that people who participate in a system of this type, since everything is free since it’s all being monetized, what reward can you get? Ultimately, this system creates assholes, because if being an asshole gets you attention, that’s exactly what you’re going to do. Because there’s a bias for negative emotions to work better in engagement, because the attention economy brings out the asshole in a lot of other people, the people who want to disrupt and destroy get a lot more efficiency for their spend than the people who might be trying to build up and preserve and improve.

—

Q: What do you think about programmers using consciously addicting techniques to keep people hooked to their products?

A: There’s a long and interesting history that goes back to the 19th century, with the science of Behaviorism that arose to study living things as though they were machines.

Behaviorists had this feeling that I think might be a little like this godlike feeling that overcomes some hackers these days, where they feel totally godlike as though they have the keys to everything and can control people.

—

I think our responsibility as engineers is to engineer as well as possible, and to engineer as well as possible, you have to treat the thing you’re engineering as a product.

You can’t respect it in a deified way.

It goes in the reverse. We’ve been talking about the behaviorist approach to people, and manipulating people with addictive loops as we currently do with online systems.

In this case, you’re treating people as objects.

It’s the flipside of treating machines as people, as AI does. They go together. Both of them are mistakes.

Source: Read the extensive interview at Business Insider

Various computer scientists, researchers, lawyers and other techies have recently been attending bi-monthly meetings in Montreal to discuss life’s big questions — as they relate to our increasingly intelligent machines.

Should a computer give medical advice? Is it acceptable for the legal system to use algorithms in order to decide whether convicts get paroled? Can an artificial agent that spouts racial slurs be held culpable?

And perhaps most pressing for many people: Are Facebook and other social media applications capable of knowing when a user is depressed or suffering a manic episode — and are these people being targeted with online advertisements in order to exploit them at their most vulnerable?

Researchers such as Abhishek Gupta are trying to help Montreal lead the world in ensuring AI is developed responsibly.

“The spotlight of the world is on (Montreal),” said Gupta, an AI ethics researcher at McGill University who is also a software developer in cybersecurity at Ericsson.

His bi-monthly “AI ethics meet-up” brings together people from around the city who want to influence the way researchers are thinking about machine-learning.

“In the past two months we’ve had six new AI labs open in Montreal,” Gupta said. “It makes complete sense we would also be the ones who would help guide the discussion on how to do it ethically.”

In November, Gupta and Universite de Montreal researchers helped create the Montreal Declaration for a Responsible Development of Artificial Intelligence, which is a series of principles seeking to guide the evolution of AI in the city and across the planet.

Its principles are broken down into seven themes: well-being, autonomy, justice, privacy, knowledge, democracy and responsibility.

“How do we ensure that the benefits of AI are available to everyone?” Gupta asked his group. “What types of legal decisions can we delegate to AI?”

Doina Precup, a McGill University computer science professor and the Montreal head of DeepMind … said the global industry is starting to be preoccupied with the societal consequences of machine-learning, and Canadian values encourage the discussion.

“Montreal is a little ahead because we are in Canada,” Precup said. “Canada, compared to other parts of the world, has a different set of values that are more oriented towards ensuring everybody’s wellness. The background and culture of the country and the city matter a lot.”

Source: iPolitics

As autonomous and intelligent systems become more pervasive, it is essential the designers and developers behind them stop to consider the ethical considerations of what they are unleashing.

That’s the view of the Institute of Electrical and Electronics Engineers (IEEE), which this week released for feedback its second Ethically Aligned Design document in an attempt to ensure such systems “remain human-centric”.

“These systems have to behave in a way that is beneficial to people beyond reaching functional goals and addressing technical problems. This will allow for an elevated level of trust between people and technology that is needed for its fruitful, pervasive use in our daily lives,” the document states.

“Defining what exactly ‘right’ and ‘good’ are in a digital future is a question of great complexity that places us at the intersection of technology and ethics,”

“Throwing our hands up in air crying ‘it’s too hard’ while we sit back and watch technology careen us forward into a future that happens to us, rather than one we create, is hardly a viable option.

“This publication is a truly game-changing and promising first step in a direction – which has often felt long in coming – toward breaking the protective wall of specialisation that has allowed technologists to disassociate from the societal impacts of their technologies.”

“It will demand that future tech leaders begin to take responsibility for and think deeply about the non-technical impact on disempowered groups, on privacy and justice, on physical and mental health, right down to unpacking hidden biases and moral implications. It represents a positive step toward ensuring the technology we build as humans genuinely benefits us and our planet,” [University of Sydney software engineering Professor Rafael Calvo.]

“We believe explicitly aligning technology with ethical values will help advance innovation with these new tools while diminishing fear in the process,” the IEEE said.

Source: Computer World

Jordi Ribas, left, and Kristina Behr, right, showcased Microsoft’s AI advances at an event Wednesday. Photo by Dan DeLong.

These days, people want more intelligent answers: Maybe they’d like to gather the pros and cons of a certain exercise plan or figure out whether the latest Marvel movie is worth seeing. They might even turn to their favorite search tool with only the vaguest of requests, such as, “I’m hungry.”

When people make requests like that, they don’t just want a list of websites. They might want a personalized answer, such as restaurant recommendations based on the city they are traveling in. Or they might want a variety of answers, so they can get different perspectives on a topic. They might even need help figuring out the right question to ask.

At a Microsoft event in San Francisco on Wednesday, Microsoft executives showcased a number of advances in its Bing search engine, Cortana intelligent assistant and Microsoft Office 365 productivity tools that use artificial intelligence to help people get more nuanced information and assist with more complex needs.

“AI has come a long way in the ability to find information, but making sense of that information is the real challenge,” said Kristina Behr, a partner design and planning program manager with Microsoft’s Artificial Intelligence and Research group.

Microsoft demonstrated some of the most recent AI-driven advances in intelligent search that are aimed at giving people richer, more useful information.

Another new, AI-driven advance in Bing is aimed at getting people multiple viewpoints on a search query that might be more subjective.

For example, if you ask Bing “is cholesterol bad,” you’ll see two different perspectives on that question.

That’s part of Microsoft’s effort to acknowledge that sometimes a question doesn’t have a clear black and white answer.

“As Bing, what we want to do is we want to provide the best results from the overall web. We want to be able to find the answers and the results that are the most comprehensive, the most relevant and the most trustworthy,” Ribas said.

“Often people are seeking answers that go beyond something that is a mathematical equation. We want to be able to frame those opinions and articulate them in a way that’s also balanced and objective.”

Source: Microsoft

This article attempts to bring our readers to Kate’s brilliant Keynote speech at NIPS 2017. It talks about different forms of bias in Machine Learning systems and the ways to tackle such problems.

The rise of Machine Learning is every bit as far reaching as the rise of computing itself.

A vast new ecosystem of techniques and infrastructure is emerging in the field of machine learning and we are just beginning to learn their full capabilities. But with the exciting things that people can do, there are some really concerning problems arising.

Forms of bias, stereotyping and unfair determination are being found in machine vision systems, object recognition models, and in natural language processing and word embeddings. High profile news stories about bias have been on the rise, from women being less likely to be shown high paying jobs, to gender bias in object recognition datasets like MS COCO, to racial disparities in education AI systems.

Bias is a skew that produces a type of harm.

Bias most commonly comes from training data. The data can be incomplete, biased or otherwise skewed. It can draw from non-representative samples that are wholly defined before use. Sometimes the bias is not obvious because the dataset was constructed in a non-transparent way. Beyond human labeling, there are other ways that human biases and cultural assumptions can creep in, ending up in the exclusion or overrepresentation of a subpopulation. Case in point: stop-and-frisk program data used as training data by an ML system. This dataset was biased due to systemic racial discrimination in policing.

The majority of the literature understands bias as harms of allocation. Allocative harm is when a system allocates an opportunity or resource to certain groups, or withholds it from them. It is primarily an economically oriented view, e.g. who gets a mortgage, a loan, etc.

Allocation is immediate; it is a time-bound moment of decision making. It is readily quantifiable. In other words, it raises questions of fairness and justice in discrete and specific transactions.
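To make "readily quantifiable" concrete, here is a minimal sketch of one way an allocative disparity could be measured: comparing approval rates across groups. It is not taken from the keynote. The group labels, the loan decisions and the function names `approval_rates` and `disparity_ratio` are all hypothetical, and a ratio of approval rates is only one of several possible measures.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the share of approved applications for each group.

    `decisions` is an iterable of (group_label, was_approved) pairs.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Ratio of the lowest to the highest approval rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        # Nobody was approved in any group, so no group is favored.
        return 1.0
    return min(rates.values()) / highest


# Hypothetical loan decisions: (group label, approved?)
decisions = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = approval_rates(decisions)
ratio = disparity_ratio(rates)
print(rates, ratio)
```

A ratio well below 1.0 flags that one group receives the resource far less often than another. It does not explain why, which is where the harder representational questions begin.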

It gets tricky when it comes to systems that represent society but don’t allocate resources. These are representational harms: they occur when systems reinforce the subordination of certain groups along the lines of identity, such as race, class, gender, etc.

It is a long-term process that affects attitudes and beliefs. It is harder to formalize and track. It is a diffused depiction of humans and society. It is at the root of all of the other forms of allocative harm.

The ultimate question for fairness in machine learning is this.

Who is going to benefit from the system we are building? And who might be harmed?

Source: Datahub

Kate Crawford is a Principal Researcher at Microsoft Research and a Distinguished Research Professor at New York University. She has spent the last decade studying the social implications of data systems, machine learning, and artificial intelligence. Her recent publications address data bias and fairness, and social impacts of artificial intelligence among others.

Zhu Long, co-founder and CEO of Yitu Technology, has his identity checked at the company’s headquarters in the Hongqiao business district in Shanghai. Picture: Zigor Aldama

“Our machines can very easily recognise you among at least 2 billion people in a matter of seconds,” says chief executive and Yitu co-founder Zhu Long, “which would have been unbelievable just three years ago.”

Its platform is also in service with more than 20 provincial public security departments, and is used as part of more than 150 municipal public security systems across the country, and Dragonfly Eye has already proved its worth.