… as Beijing began to build up speed, the United States government was slowing to a walk. After President Trump took office, the Obama-era reports on AI were relegated to an archived website.

In March 2017, Treasury secretary Steven Mnuchin said that the idea of humans losing jobs because of AI “is not even on our radar screen.” It might be a threat, he added, in “50 to 100 more years.” That same year, China committed itself to building a $150 billion AI industry by 2030.

And what’s at stake is not just the technological dominance of the United States. At a moment of great anxiety about the state of modern liberal democracy, AI in China appears to be an incredibly powerful enabler of authoritarian rule. Is the arc of the digital revolution bending toward tyranny, and is there any way to stop it?

AFTER THE END of the Cold War, conventional wisdom in the West came to be guided by two articles of faith: that liberal democracy was destined to spread across the planet, and that digital technology would be the wind at its back.

As the era of social media kicked in, the techno-optimists’ twin articles of faith looked unassailable. In 2009, during Iran’s Green Revolution, outsiders marveled at how protest organizers on Twitter circumvented the state’s media blackout. A year later, the Arab Spring toppled regimes in Tunisia and Egypt and sparked protests across the Middle East, spreading with all the virality of a social media phenomenon—because, in large part, that’s what it was.

“If you want to liberate a society, all you need is the internet,” said Wael Ghonim, an Egyptian Google executive who set up the primary Facebook group that helped galvanize dissenters in Cairo.

It didn’t take long, however, for the Arab Spring to turn into winter

… in 2013 the military staged a successful coup. Soon thereafter, Ghonim moved to California, where he tried to set up a social media platform that would favor reason over outrage. But it was too hard to peel users away from Twitter and Facebook, and the project didn’t last long. Egypt’s military government, meanwhile, recently passed a law that allows it to wipe its critics off social media.

Of course, it’s not just in Egypt and the Middle East that things have gone sour. In a remarkably short time, the exuberance surrounding the spread of liberalism and technology has turned into a crisis of faith in both. Overall, the number of liberal democracies in the world has been in steady decline for a decade. According to Freedom House, 71 countries last year saw declines in their political rights and freedoms; only 35 saw improvements.

While the crisis of democracy has many causes, social media platforms have come to seem like a prime culprit.

Which leaves us where we are now: Rather than cheering for the way social platforms spread democracy, we are busy assessing the extent to which they corrode it.

VLADIMIR PUTIN IS a technological pioneer when it comes to cyberwarfare and disinformation. And he has an opinion about what happens next with AI: “The one who becomes the leader in this sphere will be the ruler of the world.”

It’s not hard to see the appeal for much of the world of hitching their future to China. Today, as the West grapples with stagnant wage growth and declining trust in core institutions, more Chinese people live in cities, work in middle-class jobs, drive cars, and take vacations than ever before. China’s plans for a tech-driven, privacy-invading social credit system may sound dystopian to Western ears, but it hasn’t raised much protest there.

In a recent survey by the public relations consultancy Edelman, 84 percent of Chinese respondents said they had trust in their government. In the US, only a third of people felt that way.

… for now, at least, conflicting goals, mutual suspicion, and a growing conviction that AI and other advanced technologies are a winner-take-all game are pushing the two countries’ tech sectors further apart.

A permanent cleavage will come at a steep cost and will only give techno-authoritarianism more room to grow.

Source: Wired

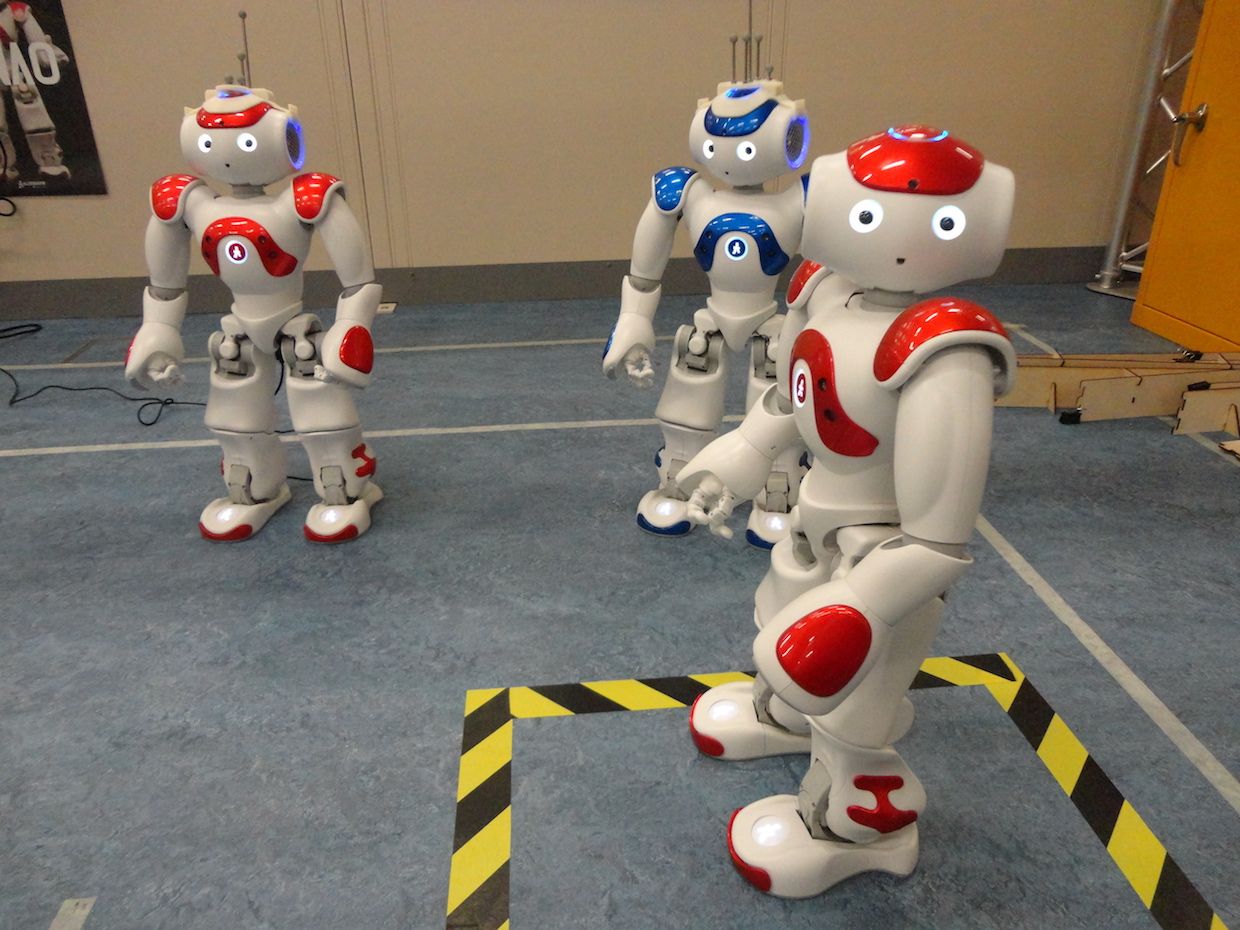

This week my colleague Dieter Vanderelst presented our paper: “The Dark Side of Ethical Robots” at AIES 2018 in New Orleans.
