AI and Jobs

The AI Cold War That Could Doom Us All

… as Beijing began to build up speed, the United States government was slowing to a walk. After President Trump took office, the Obama-era reports on AI were relegated to an archived website.

In March 2017, Treasury secretary Steven Mnuchin said that the idea of humans losing jobs because of AI “is not even on our radar screen.” It might be a threat, he added, in “50 to 100 more years.” That same year, China committed itself to building a $150 billion AI industry by 2030.

And what’s at stake is not just the technological dominance of the United States. At a moment of great anxiety about the state of modern liberal democracy, AI in China appears to be an incredibly powerful enabler of authoritarian rule. Is the arc of the digital revolution bending toward tyranny, and is there any way to stop it?

AFTER THE END of the Cold War, conventional wisdom in the West came to be guided by two articles of faith: that liberal democracy was destined to spread across the planet, and that digital technology would be the wind at its back.

As the era of social media kicked in, the techno-optimists’ twin articles of faith looked unassailable. In 2009, during Iran’s Green Revolution, outsiders marveled at how protest organizers on Twitter circumvented the state’s media blackout. A year later, the Arab Spring toppled regimes in Tunisia and Egypt and sparked protests across the Middle East, spreading with all the virality of a social media phenomenon—because, in large part, that’s what it was.

“If you want to liberate a society, all you need is the internet,” said Wael Ghonim, an Egyptian Google executive who set up the primary Facebook group that helped galvanize dissenters in Cairo.

It didn’t take long, however, for the Arab Spring to turn into winter.

… in 2013 the military staged a successful coup. Soon thereafter, Ghonim moved to California, where he tried to set up a social media platform that would favor reason over outrage. But it was too hard to peel users away from Twitter and Facebook, and the project didn’t last long. Egypt’s military government, meanwhile, recently passed a law that allows it to wipe its critics off social media.

Of course, it’s not just in Egypt and the Middle East that things have gone sour. In a remarkably short time, the exuberance surrounding the spread of liberalism and technology has turned into a crisis of faith in both. Overall, the number of liberal democracies in the world has been in steady decline for a decade. According to Freedom House, 71 countries last year saw declines in their political rights and freedoms; only 35 saw improvements.

While the crisis of democracy has many causes, social media platforms have come to seem like a prime culprit.

Which leaves us where we are now: Rather than cheering for the way social platforms spread democracy, we are busy assessing the extent to which they corrode it.

VLADIMIR PUTIN IS a technological pioneer when it comes to cyberwarfare and disinformation. And he has an opinion about what happens next with AI: “The one who becomes the leader in this sphere will be the ruler of the world.”

It’s not hard to see the appeal for much of the world of hitching their future to China. Today, as the West grapples with stagnant wage growth and declining trust in core institutions, more Chinese people live in cities, work in middle-class jobs, drive cars, and take vacations than ever before. China’s plans for a tech-driven, privacy-invading social credit system may sound dystopian to Western ears, but it hasn’t raised much protest there.

In a recent survey by the public relations consultancy Edelman, 84 percent of Chinese respondents said they had trust in their government. In the US, only a third of people felt that way.

… for now, at least, conflicting goals, mutual suspicion, and a growing conviction that AI and other advanced technologies are a winner-take-all game are pushing the two countries’ tech sectors further apart.

A permanent cleavage will come at a steep cost and will only give techno-authoritarianism more room to grow.

Source: Wired

The biggest misconception about artificial intelligence is that we have it

Artificial intelligence still has a long way to go, says IBM’s head of AI, Alexander Gray.

“The biggest misconception is that we have it. I wouldn’t even call it AI. I would say it’s right to call the field AI, we’re pursuing AI, but we don’t have it yet,” he said.

Gray says that, at present, humans are still sorely needed.

“No matter how you look at it, there’s a lot of handcrafting [involved]. We have ever increasingly powerful tools but we haven’t made the leap yet.”

According to Gray, we’re only seeing “human-level performance” for narrowly defined tasks. Most machine learning-based algorithms have to analyze thousands of examples and have not yet achieved one-shot or few-shot learning.

“Once you go slightly beyond that data set and it looks different, humans win. There will always be things that humans can do that AI can’t do. You still need human data scientists to do the data preparation part — lots of blood and guts stuff that requires open domain knowledge about the world,” he said.

Artificial intelligence is perhaps the most hyped yet misunderstood field of study today.

Gray said that while we may not be experiencing the full effects of AI yet, it’s going to happen a lot faster than we think, and that’s where the fear comes in.

“Everything moves on an exponential curve. I really do believe that we will start to see entire classes of jobs getting impacted.

“My fear is that we won’t have the social structures and agreements on what we should do to keep pace with that. I’m not sure if that makes me optimistic or pessimistic.”

Source: Yahoo

What’s Bigger Than Fire and Electricity? Artificial Intelligence – Google

Google CEO Sundar Pichai believes artificial intelligence could have “more profound” implications for humanity than electricity or fire, according to recent comments.

Pichai also warned that the development of artificial intelligence could pose as much risk as that of fire if its potential is not harnessed correctly.

“AI is one of the most important things humanity is working on,” Pichai said in an interview with MSNBC and Recode.

“My point is AI is really important, but we have to be concerned about it,” Pichai said. “It’s fair to be worried about it—I wouldn’t say we’re just being optimistic about it— we want to be thoughtful about it. AI holds the potential for some of the biggest advances we’re going to see.”

Source: Newsweek

Researchers Combat Gender and Racial Bias in Artificial Intelligence

[Timnit] Gebru, 34, joined a Microsoft Corp. team called FATE—for Fairness, Accountability, Transparency and Ethics in AI. The program was set up three years ago to ferret out biases that creep into AI data and can skew results.

“I started to realize that I have to start thinking about things like bias. Even my own PhD work suffers from whatever issues you’d have with dataset bias.”

Companies, government agencies and hospitals are increasingly turning to machine learning, image recognition and other AI tools to help predict everything from the creditworthiness of a loan applicant to the preferred treatment for a person suffering from cancer. The tools have big blind spots that particularly affect women and minorities.

“The worry is if we don’t get this right, we could be making wrong decisions that have critical consequences to someone’s life, health or financial stability,” says Jeannette Wing, director of Columbia University’s Data Sciences Institute.

AI also has a disconcertingly human habit of amplifying stereotypes. PhD students at the University of Virginia and the University of Washington examined a public dataset of photos and found that the images of people cooking were 33 percent more likely to picture women than men. When they ran the images through an AI model, the algorithms said women were 68 percent more likely to appear in the cooking photos.
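To make the two figures concrete, here is a minimal Python sketch that turns label counts into a “percent more likely” skew and compares the dataset with the model. The counts are hypothetical, picked only to reproduce the reported ratios; they are not the study’s data, and the researchers’ own amplification metric may be defined differently.

```python
def percent_more_likely(women: int, men: int) -> float:
    """Return how much more often women appear than men, as a percentage."""
    return (women / men - 1) * 100

# Hypothetical counts chosen only to match the reported ratios (not the study's data).
dataset_skew = percent_more_likely(women=133, men=100)  # skew in the dataset's labels
model_skew = percent_more_likely(women=168, men=100)    # skew in the model's predictions

print(f"dataset skew: {dataset_skew:.0f}%")  # dataset skew: 33%
print(f"model skew: {model_skew:.0f}%")      # model skew: 68%
print(f"amplification: {model_skew - dataset_skew:.0f} percentage points")
```

The gap between the two numbers is the sense in which the model amplified, rather than merely reproduced, the imbalance already present in its data.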

Researchers say it will probably take years to solve the bias problem.

The good news is that some of the smartest people in the world have turned their brainpower on the problem. “The field really has woken up and you are seeing some of the best computer scientists, often in concert with social scientists, writing great papers on it,” says University of Washington computer science professor Dan Weld. “There’s been a real call to arms.”

Source: Bloomberg

Siri as a therapist: Apple is seeking engineers who understand psychology

PL – Looks like Siri needs more help to understand.

“People have serious conversations with Siri. People talk to Siri about all kinds of things, including when they’re having a stressful day or have something serious on their mind. They turn to Siri in emergencies or when they want guidance on living a healthier life. Does improving Siri in these areas pique your interest?

“Come work as part of the Siri Domains team and make a difference.

“We are looking for people passionate about the power of data and have the skills to transform data to intelligent sources that will take Siri to next level. Someone with a combination of strong programming skills and a true team player who can collaborate with engineers in several technical areas. You will thrive in a fast-paced environment with rapidly changing priorities.”

The challenge, as explained by Ephrat Livni on Quartz:

The position requires a unique skill set. Basically, the company is looking for a computer scientist who knows algorithms and can write complex code, but also understands human interaction, has compassion, and communicates ably, preferably in more than one language. The role also promises a singular thrill: to “play a part in the next revolution in human-computer interaction.”

The job at Apple has been up since April, so maybe it’s turned out to be a tall order to fill. Still, it shouldn’t be impossible to find people who are interested in making machines more understanding. If it is, we should probably stop asking Siri such serious questions.

Computer scientists developing artificial intelligence have long debated what it means to be human and how to make machines more compassionate. Apart from the technical difficulties, the endeavor raises ethical dilemmas, as noted in the 2012 MIT Press book Robot Ethics: The Ethical and Social Implications of Robotics.

Even if machines could be made to feel for people, it’s not clear what feelings are the right ones to make a great and kind advisor and in what combinations. A sad machine is no good, perhaps, but a really happy machine is problematic, too.

In a chapter on creating compassionate artificial intelligence, sociologist, bioethicist, and Buddhist monk James Hughes writes:

Programming too high a level of positive emotion in an artificial mind, locking it into a heavenly state of self-gratification, would also deny it the capacity for empathy with other beings’ suffering, and the nagging awareness that there is a better state of mind.

Source: Quartz

Behind the Google diversity memo furor is fear of Google’s vast opaque power

Fear of the opaque power that Google in particular, and Silicon Valley in general, wields over our lives.

If Google — and the tech world more generally — is sexist, or in the grips of a totalitarian cult of political correctness, or a secret hotbed of alt-right reactionaries, the consequences would be profound.

Google wields a monopoly over search, one of the central technologies of our age, and, alongside Facebook, dominates the internet advertising market, making it a powerful driver of both consumer opinion and the media landscape.

It shapes the world in which we live in ways both obvious and opaque.

This is why trust matters so much in tech. It’s why Google, to attain its current status in society, had to promise, again and again, that it wouldn’t be evil.

Compounding the problem is that the tech industry’s point of view is embedded deep in the product, not announced on the packaging. Its biases are quietly built into algorithms, reflected in platform rules, expressed in code few of us can understand and fewer of us will ever read.

But what if it actually is evil? Or what if it’s not evil but just immature, unreflective, and uncompassionate? And what if that’s the culture that designs the digital services the rest of us have to use?

The technology industry’s power is vast, and the way that power is expressed is opaque, so the only real assurance you can have that your interests and needs are being considered is to be in the room when the decisions are made and the code is written. But tech as an industry is unrepresentative of the people it serves and unaccountable in the way it serves them, and so there’s very little confidence among any group that the people in the room are the right ones.

Source: Vox (article by Ezra Klein)

IBM Watson CTO on Why Augmented Intelligence Beats AI

If you look at almost every other tool that has ever been created, our tools tend to be most valuable when they’re amplifying us, when they’re extending our reach, when they’re increasing our strength, when they’re allowing us to do things that we can’t do by ourselves as human beings. That’s really the way that we need to be thinking about AI as well, and to the extent that we actually call it augmented intelligence, not artificial intelligence.

Some time ago we realized that this thing called cognitive computing was really bigger than us, it was bigger than IBM, it was bigger than any one vendor in the industry, it was bigger than any of the one or two different solution areas that we were going to be focused on, and we had to open it up, which is when we shifted from focusing on solutions to really dealing with more of a platform of services, where each service really is individually focused on a different part of the problem space.

… what we’re talking about now are a set of services, each of which do something very specific, each of which are trying to deal with a different part of our human experience, and with the idea that anybody building an application, anybody that wants to solve a social or consumer or business problem can do that by taking our services, then composing that into an application.

… If the doctor can now make decisions that are more informed, that are based on real evidence, that are supported by the latest facts in science, that are more tailored and specific to the individual patient, it allows them to actually do their job better. For radiologists, it may allow them to see things in the image that they might otherwise miss or get overwhelmed by. It’s not about replacing them. It’s about helping them do their job better.

That’s really the way to think about this stuff, is that it will have its greatest utility when it is allowing us to do what we do better than we could by ourselves, when the combination of the human and the tool together are greater than either one of them would’ve been by themselves. That’s really the way we think about it. That’s how we’re evolving the technology. That’s where the economic utility is going to be.

There are lots of things that we as human beings are good at. There are also a lot of things that we’re not very good at, and that’s, I think, where cognitive computing really starts to make a huge difference: when it’s able to bridge that distance and make up that gap.

A way I like to say it is it doesn’t do our thinking for us, it does our research for us so we can do our thinking better, and that’s true of us as end users and it’s true of advisors.

Source: PCMag

We should not talk about jobs being lost but people suffering #AI

How can humans stay ahead of an ever-growing machine intelligence? “I think the challenge for us is to always be creative,” says former world chess champion Garry Kasparov.

He also discussed the threat that increasingly capable AI poses to (human) jobs, arguing that we should not try to predict what will happen in the future but rather look at immediate problems.

“We make predictions and most are wrong because we’re trying to base it on our past experience,” he argued. “I think the problem is not that AI is exceeding our level. It’s another cycle.

“Machines have been constantly replacing all sorts of jobs… We should not talk about jobs being lost but people suffering.”

“AI is just another challenge. The difference is that now intelligence machines are coming after people with a college degree or with social media and Twitter accounts,” he added.

Source: TechCrunch

80% of what human physicians currently do will soon be done instead by technology, allowing physicians to focus on patient interaction

Data-driven AI technologies are well suited to address chronic inefficiencies in health markets, potentially lowering costs by hundreds of billions of dollars, while simultaneously reducing the time burden on physicians.

These technologies can be leveraged to capture the massive volume of data that describes a patient’s past and present state, project potential future states, analyze that data in real time, assist in reasoning about the best way to achieve patient and physician goals, and provide both patient and physician constant real-time support. Only AI can fulfill such a mission. There is no other solution.

Technologist and investor Vinod Khosla posited that 80 percent of what human physicians currently do will soon be done instead by technology, allowing physicians to focus their time on the really important elements of patient-physician interaction.

Within five years, the healthcare sector has the potential to undergo a complete metamorphosis courtesy of breakthrough AI technologies. Here are just a few examples:

1. Physicians will practice with AI virtual assistants (using, for example, software tools similar to Apple’s Siri, but specialized to the specific healthcare application).

2. Physicians with AI virtual assistants will be able to treat 5 to 10 times as many patients with chronic illnesses as they do today, with better outcomes than in the past.

Patients will have a constant “friend” providing a digital health conscience to advise, support, and even encourage them to make healthy choices and pursue a healthy lifestyle.

3. AI virtual assistants will support both patients and healthy individuals in health maintenance with ongoing and real-time intelligent advice.

Our greatest opportunity for AI-enhancement in the sector is keeping people healthy, rather than waiting to treat them when they are sick. AI virtual assistants will be able to acquire deep knowledge of diet, exercise, medications, emotional and mental state, and more.

4. Medical devices previously only available in hospitals will be available in the home, enabling much more precise and timely monitoring and leading to a healthier population.

5. Affordable new tools for diagnosis and treatment of illnesses will emerge based on data collected from extant and widely adopted digital devices such as smartphones.

6. Robotics and in-home AI systems will assist patients with independent living.

But don’t be misled: the best metaphor is that these assistants are learning like humans learn and that they are in their infancy, just starting to crawl. Healthcare AI virtual assistants will soon be able to walk, and then run.

Many of today’s familiar AI engines, personified in Siri, Cortana, Alexa, Google Assistant or any of the hundreds of “intelligent chatbots,” are still immature and their capabilities are highly limited. Within the next few years they will be conversational, they will learn from the user, they will maintain context, and they will provide proactive assistance, just to name a few of their emerging capabilities.

And with these capabilities applied in the health sector, they will enable us to keep millions of citizens healthier, give physicians the support and time they need to practice, and save trillions of dollars in healthcare costs. Welcome to the age of AI.

Source: VentureBeat

A blueprint for coexistence with #AI

In September 2013, I was diagnosed with fourth-stage lymphoma.

This near-death experience has not only changed my life and priorities, but also altered my view of artificial intelligence—the field that captured my selfish attention for all those years.

This personal reformation gave me an enlightened view of what AI should mean for humanity. Many of the recent discussions about AI have concluded that this scientific advance will likely take over the world, dominate humans, and end poorly for mankind.

But my near-death experience has enabled me to envision an alternate ending to the AI story—one that makes the most of this amazing technology while empowering humans not just to survive, but to thrive.

Love is what is missing from machines. That’s why we must pair up with them, to leaven their powers with what only we humans can provide. Your future AI diagnostic tool may well be 10 times more accurate than human doctors, but patients will not want a cold pronouncement from the tool: “You have fourth-stage lymphoma and a 70 percent likelihood of dying within five years.” That in itself would be harmful.

Patients would benefit, in health and heart, from a “doctor of love” who will spend as much time as the patient needs, always be available to discuss their case, and who will even visit the patients at home. This doctor might encourage us by sharing stories such as, “Kai-Fu had the same lymphoma, and he survived, so you can too.”

This kind of “doctor of love” would not only make us feel better and give us greater confidence, but would also trigger a placebo effect that would increase our likelihood of recuperation. Meanwhile, the AI tool would watch the Q&A between the “doctor of love” and the patient carefully, and then optimize the treatment. If scaled across the world, the number of “doctors of love” would greatly outnumber today’s doctors.

Let us choose to let machines be machines, and let humans be humans. Let us choose to use our machines, and love one another.

Kai-Fu Lee, Ph.D., is the Founder and CEO of Sinovation Ventures and the president of its Artificial Intelligence Institute.

Source: Wired

Will there be any jobs left as #AI advances?

A new report from the International Bar Association suggests machines will most likely replace humans in high-routine occupations.

The authors have suggested that governments introduce human quotas in some sectors in order to protect jobs.

“We thought it’d just be an insight into the world of automation and the blue-collar sector. This topic has picked up speed tremendously and you can see it everywhere and read it every day. It’s a hot topic now.” – Gerlind Wisskirchen, a lawyer who coordinated the study

For business futurist Morris Miselowski, job shortages will be a reality in the future.

“I’m not absolutely convinced we will have enough work for everybody on this planet within 30 years anyway. I’m not convinced that work as we understand it, this nine-to-five, Monday to Friday, is sustainable for many of us for the next couple of decades.”

“Even though automation began 30 years ago in the blue-collar sector, the new development of artificial intelligence and robotics affects not just blue collar, but the white-collar sector,” said Ms Wisskirchen. “You can see that when you see jobs that will be replaced by algorithms or robots depending on the sector.”

The report has recommended some methods to mitigate human job losses, including a type of ‘human quota’ in any sector, a ‘made by humans’ label, or a tax for the use of machines.

But for Professor Miselowski, setting up human and computer ratios in the workplace would be impractical.

“We want to maintain human employment for as long as possible, but I don’t see it as practical or pragmatic in the long-term,” he said. “I prefer what I call a trans-humanist world, where what we do is we learn to work alongside machines the same way we have with computers and calculators.”

“It’s just something that is going to happen, or has already started to happen. And we need to make the best out of it, but we need to think ahead and be very thoughtful in how we shape society in the future, and that’s, I think, a challenge for everybody,” said Ms Wisskirchen.

Source: ABC News

We’re so unprepared for the robot apocalypse

Industrial robots alone eliminated up to 670,000 American jobs between 1990 and 2007.

It seems that after a factory sheds workers, that economic pain reverberates, triggering further unemployment at, say, the grocery store or the neighborhood car dealership.

In a way, this is surprising. Economists understand that automation has costs, but they have largely emphasized the benefits: Machines make things cheaper, and they free up workers to do other jobs.

The latest study reveals that for manufacturing workers, the process of adjusting to technological change has been much slower and more painful than most experts thought.

Every industrial robot eliminated about three manufacturing positions, plus three more jobs from around town.

“We were looking at a span of 20 years, so in that timeframe, you would expect that manufacturing workers would be able to find other employment,” said Pascual Restrepo, one of the study’s authors. Instead, not only did the factory jobs vanish, but other local jobs disappeared too.

This evidence draws attention to the losers: the dislocated factory workers who just can’t bounce back.

One robot in the workforce led to the loss of 6.2 jobs within a commuting zone, the area within which local people travel to work.

The robots also reduce wages, with one robot per thousand workers leading to a wage decline of between 0.25% and 0.5% (Fortune).

Perhaps that much was obvious. After all, anecdotes about the Rust Belt abound. But the new findings bolster the conclusion that these economic dislocations are not brief setbacks, but can hurt areas for an entire generation.

How do we even know that automation is a big part of the story at all? A key bit of evidence is that, despite the massive layoffs, American manufacturers are making more stuff than ever. Factories have become vastly more productive.

Some consultants believe that the number of industrial robots will quadruple in the next decade, which could mean millions more displaced manufacturing workers.

The question, now, is what to do if the period of “maladjustment” lasts decades, or possibly a lifetime, as the latest evidence suggests.

Automation amplified opportunities for people with advanced skills and talents.

Source: The Washington Post

AI to become main way banks interact with customers within three years

Four in five bankers believe AI will “revolutionise” the way in which banks gather information as well as how they interact with their clients, said the Accenture Banking Technology Vision 2017 report.

More than three quarters of respondents to the survey believed that AI would enable more simple user interfaces, which would help banks create a more human-like customer experience.

“(It) will give people the impression that the bank knows them a lot better, and in many ways it will take banking back to the feeling that people had when there were more human interactions.”

“The big paradox here is that people think technology will lead to banking becoming more and more automated and less and less personalized, but what we’ve seen coming through here is the view that technology will actually help banking become a lot more personalized,” said Alan McIntyre, head of Accenture’s banking practice and co-author of the report.

The top reason for using AI for user interfaces, cited by 60 percent of the bankers surveyed, was “to gain data analysis and insights”.

Source: KFGO

These chatbots may one day even replace your doctor

As artificial intelligence programs learn to better communicate with humans, they’ll soon encroach on careers once considered untouchable, like law and accounting.

These chatbots may one day even replace your doctor.

This January, the United Kingdom’s National Health Service launched a trial with Babylon Health, a startup developing an AI chatbot.

The bot’s goal is the same as that of the NHS 111 non-emergency helpline, only without humans: to avoid unnecessary doctor appointments and help patients with over-the-counter remedies.

Using the system, patients chat with the bot about their symptoms, and the app determines whether they should see a doctor, go to a pharmacy, or stay home. It’s now available to about 1.2 million Londoners.

But the upcoming version of Babylon’s chatbot can do even more: In tests, it’s now diagnosing patients faster than human doctors can, says Dr. Ali Parsa, the company’s CEO. The technology can accurately diagnose about 80 percent of illnesses commonly seen by primary care doctors.

The reason these chatbots are increasingly important is cost: two-thirds of money moving through the U.K.’s health system goes to salaries.

“Human beings are very expensive,” Parsa says. “If we want to make healthcare affordable and accessible for everyone, we’ll need to attack the root causes.”

Globally, there are 5 million fewer doctors today than needed, so anything that lets a doctor do their job faster and more easily will be welcome, Parsa says.

Half the world’s population has little access to health care — but they have smartphones. Chatbots could get them the help they need.

Source: NBC News

Tech Reckons With the Problems It Helped Create

At SXSW this year, the conference itself feels a lot like a hangover.

It’s as if the coastal elites who attend each year finally woke up with a serious case of the Sunday scaries, realizing that the many apps, platforms, and doodads SXSW has launched and glorified over the years haven’t really made the world a better place. In fact, they’ve often come with wildly destructive and dangerous side effects. Sure, it all seemed like a good idea in 2013!

But now the party’s over. It’s time for the regret-filled cleanup.

Speakers related how the very platforms that were meant to promote a marketplace of ideas online have become filthy junkyards of harassment and disinformation.

Yasmin Green, who leads an incubator within Alphabet called Jigsaw, focused her remarks on the rise of fake news, and even brought two propaganda publishers with her on stage to explain how, and why, they do what they do. For Jestin Coler, founder of the phony Denver Guardian, it was an all-too-easy way to turn a profit during the election.

“To be honest, my mortgage was due,” Coler said of what inspired him to write a bogus article claiming that an FBI agent connected to Hillary Clinton’s email investigation had been found dead in a murder-suicide. That post was shared some 500,000 times just days before the election.

While prior years’ panels may have optimistically offered up more tech as the answer to what ails tech, this year was decidedly short on solutions.

There seemed to be, throughout the conference, a keen awareness of the limits human beings ought to place on the software that is very much eating the world.

Source: Wired

Technology is the main driver of the recent increases in inequality

Artificial Intelligence And Income Inequality

While economists debate the extent to which technology plays a role in global inequality, most agree that tech advances have exacerbated the problem.

Economist Erik Brynjolfsson said,

“My reading of the data is that technology is the main driver of the recent increases in inequality. It’s the biggest factor.”

AI expert Yoshua Bengio suggests that equality and ensuring a shared benefit from AI could be pivotal in the development of safe artificial intelligence. Bengio, a professor at the University of Montreal, explains, “In a society where there’s a lot of violence, a lot of inequality, [then] the risk of misusing AI or having people use it irresponsibly in general is much greater. Making AI beneficial for all is very central to the safety question.”

“It’s almost a moral principle that we should share benefits among more people in society,” argued Bart Selman, a professor at Cornell University … “So we have to go into a mode where we are first educating the people about what’s causing this inequality and acknowledging that technology is part of that cost, and then society has to decide how to proceed.”

Source: HuffPost

Is Your Doctor Stumped? There’s a Chatbot for That

Doctors have created a chatbot to revolutionize communication within hospitals using artificial intelligence … basically a cyber-radiologist in app form, can quickly and accurately provide specialized information to non-radiologists. And, like all good A.I., it’s constantly learning.

Traditionally, interdepartmental communication in hospitals is a hassle. A clinician’s assistant or nurse practitioner with a radiology question would need to get a specialist on the phone, which can take time and risks miscommunication. But using the app, non-radiologists can plug in common technical questions and receive an accurate response instantly.

“Say a patient has a creatinine [lab test to see how well the kidneys are working],” co-author and application programmer Kevin Seals tells Inverse. “You send a message, like you’re texting with a human radiologist. ‘My patient is a 5.6, can they get a CT scan with contrast?’ A lot of this is pretty routine questions that are easily automated with software, but there’s no good tool for doing that now.”

In about a month, the team plans to make the chatbot available to everyone at UCLA’s Ronald Reagan Medical Center, see how that plays out, and scale up from there. Your doctor may never be stumped again.

Source: Inverse

The last things that will make us uniquely human

What will be my son’s place in a world where machines trounce us in one area after another?

Some are worried that self-driving cars and trucks may displace millions of professional drivers (they are right), and disrupt entire industries (yup!). But I worry about my six-year-old son. What will his place be in a world where machines trounce us in one area after another? What will he do, and how will he relate to these ever-smarter machines? What will be his and his human peers’ contribution to the world he’ll live in?

He’ll never calculate faster, or solve a math equation quicker. He’ll never type faster, never drive better, or even fly more safely. He may continue to play chess with his friends, but because he’s a human he will no longer stand a chance of ever becoming the best chess player on the planet. He might still enjoy speaking multiple languages (as he does now), but in his professional life that may not be a competitive advantage anymore, given recent improvements in real-time machine translation.

So perhaps we might want to consider qualities at a different end of the spectrum: radical creativity, irrational originality, even a dose of plain illogical craziness, instead of hard-nosed logic. A bit of Kirk instead of Spock.

Actually, it all comes down to a fairly simple question: What’s so special about us, and what’s our lasting value? It can’t be skills like arithmetic or typing, which machines already excel in. Nor can it be rationality, because with all our biases and emotions we humans fall short there.

So far, machines have a pretty hard time emulating these qualities: the crazy leaps of faith, arbitrary enough to not be predicted by a bot, and yet more than simple randomness. Their struggle is our opportunity.

So we must aim our human contribution to this division of labour to complement the rationality of the machines, rather than to compete with it. That will sustainably differentiate us from them, and it is differentiation that creates value.

Source: BBC. Viktor Mayer-Schönberger is Professor of Internet Governance and Regulation at the Oxford Internet Institute, University of Oxford.

Burger-flipping robot could spell the end of teen employment

The AI-driven robot ‘Flippy,’ by Miso Robotics, is marketed as a kitchen assistant, rather than a replacement for professionally trained teens who ponder the meaning of life (or what their crush looks like naked) while awaiting a kitchen timer’s signal that it’s time to flip the meat.

Flippy features a number of different sensors and cameras to identify food objects on the grill. It knows, for example, that burgers and chicken-like patties cook for different durations. Once the burger is done, the machine expertly lifts it off the grill and uses its on-board technology to place it gently on a perfectly browned bun.

The robot doesn’t just work the grill like a master hibachi chef, either. Flippy is capable of deep frying, chopping vegetables, and even plating dishes.

Source: TNW

JPMorgan software does in seconds what took lawyers 360,000 hours

At JPMorgan, a learning machine is parsing financial deals that once kept legal teams busy for thousands of hours.

The program, called COIN, for Contract Intelligence, does the mind-numbing job of interpreting commercial-loan agreements that, until the project went online in June, consumed 360,000 hours of lawyers’ time annually. The software reviews documents in seconds, is less error-prone and never asks for vacation.

COIN is just the start for the biggest U.S. bank. The firm recently set up technology hubs for teams specialising in big data, robotics and cloud infrastructure to find new sources of revenue, while reducing expenses and risks.

The push to automate mundane tasks and create new tools for bankers and clients is a growing part of the firm’s $9.6 billion technology budget.

Behind the strategy, overseen by Chief Operating Officer Matt Zames and Chief Information Officer Dana Deasy, is an undercurrent of anxiety: though JPMorgan emerged from the financial crisis as one of the few big winners, its dominance is at risk unless it aggressively pursues new technologies, according to interviews with a half-dozen bank executives.

Source: Independent

Teaching an Algorithm to Understand Right and Wrong

Aristotle states that it is a fact that “all knowledge and every pursuit aims at some good,” but then continues, “What then do we mean by the good?” That, in essence, encapsulates the ethical dilemma.

We all agree that we should be good and just, but it’s much harder to decide what that entails.

“We need to decide to what extent the legal principles that we use to regulate humans can be used for machines. There is a great potential for machines to alert us to bias. We need to not only train our algorithms but also be open to the possibility that they can teach us about ourselves.” – Francesca Rossi, an AI researcher at IBM

Since Aristotle’s time, the questions he raised have been continually discussed and debated.

Today, as we enter a “cognitive era” of thinking machines, the problem of what should guide our actions is gaining newfound importance. If we find it so difficult to define the principles by which a person should act justly and wisely, then how are we to encode them within the artificial intelligences we are creating? It is a question we need to answer soon.

Cultural Norms vs. Moral Values

Another issue we will have to contend with is deciding not only what ethical principles to encode in artificial intelligences but also how they are coded. For the most part, “Thou shalt not kill” is a strict principle, with only a few rare exceptions, such as the Secret Service or a soldier. Other principles are more like preferences that are greatly affected by context.

What makes one thing a moral value and another a cultural norm? Well, that’s a tough question for even the most-lauded human ethicists, but we will need to code those decisions into our algorithms. In some cases, there will be strict principles; in others, merely preferences based on context. For some tasks, algorithms will need to be coded differently according to what jurisdiction they operate in.

Setting a Higher Standard

Most AI experts I’ve spoken to think that we will need to set higher moral standards for artificial intelligences than we do for humans.

Major industry players, such as Google, IBM, Amazon, and Facebook, recently set up a partnership to create an open platform between leading AI companies and stakeholders in academia, government, and industry to advance understanding and promote best practices. Yet that is merely a starting point.

Source: Harvard Business Review

12 Observations About Artificial Intelligence From The O’Reilly AI Conference

Bloggers: Here are a few excerpts from a long but very informative review. (The best may be last.)

The conference was organized by Ben Lorica and Roger Chen with Peter Norvig and Tim O’Reilly acting as honorary program chairs.

For a machine to act in an intelligent way, said [Yann] LeCun, it needs “to have a copy of the world and its objective function in such a way that it can roll out a sequence of actions and predict their impact on the world.” To do this, machines need to understand how the world works, learn a large amount of background knowledge, perceive the state of the world at any given moment, and be able to reason and plan.

Peter Norvig explained why machine learning is more difficult than traditional software: “lack of clear abstraction barriers” (debugging is harder because it’s difficult to isolate a bug); “non-modularity” (if you change anything, you end up changing everything); “nonstationarity” (the need to account for new data); “whose data is this?” (issues around privacy, security, and fairness); and a lack of adequate tools and processes (existing ones were developed for traditional software).

AI must consider culture and context—“training shapes learning”

“Many of the current algorithms have already built in them a country and a culture,” said Genevieve Bell, Intel Fellow and Director of Interaction and Experience Research at Intel. As today’s smart machines are (still) created and used only by humans, culture and context are important factors to consider in their development.

Both Rana El Kaliouby (CEO of Affectiva, a startup developing emotion-aware AI) and Aparna Chennapragada (Director of Product Management at Google) stressed the importance of using diverse training data—if you want your smart machine to work everywhere on the planet it must be attuned to cultural norms.

“Training shapes learning: the training data you put in determines what you get out,” said Chennapragada. And it’s not just culture that matters, but also context.

The £10 million Leverhulme Centre for the Future of Intelligence will explore “the opportunities and challenges of this potentially epoch-making technological development,” namely AI. According to The Guardian, Stephen Hawking said at the opening of the Centre,

“We spend a great deal of time studying history, which, let’s face it, is mostly the history of stupidity. So it’s a welcome change that people are studying instead the future of intelligence.”

Gary Marcus, professor of psychology and neural science at New York University and cofounder and CEO of Geometric Intelligence, said:

“a lot of smart people are convinced that deep learning is almost magical—I’m not one of them … A better ladder does not necessarily get you to the moon.”

Tom Davenport added, at the conference: “Deep learning is not profound learning.”

AI changes how we interact with computers—and it needs a dose of empathy

AI may continue to be hampered by a futile search for human-level intelligence while locked into a materialist paradigm

Maybe, just maybe, our minds are not computers and computers do not resemble our brains? And maybe, just maybe, if we finally abandon the futile pursuit of replicating “human-level AI” in computers, we will find many additional, albeit “narrow,” applications of computers to enrich and improve our lives?

Gary Marcus complained about research papers presented at the Neural Information Processing Systems (NIPS) conference, saying that they are like alchemy, adding a layer or two to a neural network, “a little fiddle here or there.” Instead, he suggested “a richer base of instruction set of basic computations,” arguing that “it’s time for genuinely new ideas.”

Is it possible that this paradigm—and the driving ambition at its core to play God and develop human-like machines—has led to the infamous “AI Winter”? And that continuing to adhere to it and refusing to consider “genuinely new ideas,” out-of-the-dominant-paradigm ideas, will lead to yet another AI Winter?

Source: Forbes

Artificial Intelligence’s White Guy Problem

Warnings by luminaries like Elon Musk and Nick Bostrom about “the singularity” — when machines become smarter than humans — have attracted millions of dollars and spawned a multitude of conferences.

But this hand-wringing is a distraction from the very real problems with artificial intelligence today, which may already be exacerbating inequality in the workplace, at home and in our legal and judicial systems.

Sexism, racism and other forms of discrimination are being built into the machine-learning algorithms that underlie the technology behind many “intelligent” systems that shape how we are categorized and advertised to.

A very serious example was revealed in an investigation published last month by ProPublica. It found that widely used software that assessed the risk of recidivism in criminals was twice as likely to mistakenly flag black defendants as being at a higher risk of committing future crimes. It was also twice as likely to incorrectly flag white defendants as low risk.

The reason those predictions are so skewed is still unknown, because the company responsible for these algorithms keeps its formulas secret — it’s proprietary information. Judges do rely on machine-driven risk assessments in different ways — some may even discount them entirely — but there is little they can do to understand the logic behind them.
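To make the two error claims concrete: being “mistakenly flagged as higher risk” is a false positive (someone who did not reoffend but was labeled high risk), and being “incorrectly flagged as low risk” is a false negative (someone who did reoffend but was labeled low risk). The Python sketch below computes both rates per group. The records, the group labels “A” and “B”, and the helper names are hypothetical toy data chosen for illustration; this is not ProPublica’s dataset or a reproduction of its analysis.

```python
from dataclasses import dataclass


@dataclass
class Record:
    group: str           # hypothetical demographic group label
    flagged_high: bool   # did the risk tool label this person high risk?
    reoffended: bool     # observed outcome during the follow-up period


def error_rates(records, group):
    """Return (false_positive_rate, false_negative_rate) for one group."""
    rows = [r for r in records if r.group == group]
    # False positive: did NOT reoffend, yet was flagged high risk.
    negatives = [r for r in rows if not r.reoffended]
    false_pos = sum(r.flagged_high for r in negatives)
    # False negative: DID reoffend, yet was labeled low risk.
    positives = [r for r in rows if r.reoffended]
    false_neg = sum(not r.flagged_high for r in positives)
    fpr = false_pos / len(negatives) if negatives else 0.0
    fnr = false_neg / len(positives) if positives else 0.0
    return fpr, fnr


def make_group(group, n_neg, n_fp, n_pos, n_fn):
    """Build hypothetical records with a chosen number of each error type."""
    # The first n_fp non-reoffenders are (wrongly) flagged high risk.
    recs = [Record(group, i < n_fp, False) for i in range(n_neg)]
    # The first n_fn reoffenders are (wrongly) labeled low risk.
    recs += [Record(group, i >= n_fn, True) for i in range(n_pos)]
    return recs


# Toy data only: 20 people per group, numbers invented for illustration.
records = make_group("A", 10, 4, 10, 2) + make_group("B", 10, 2, 10, 4)

for g in ("A", "B"):
    fpr, fnr = error_rates(records, g)
    print(f"group {g}: false positive rate {fpr:.0%}, false negative rate {fnr:.0%}")
```

In this made-up data, group A’s false positive rate is twice group B’s while group B’s false negative rate is twice group A’s, the same shape of disparity the investigation describes. Both toy groups have identical overall accuracy (14 of 20 correct), so only the per-group error rates reveal the skew.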

Histories of discrimination can live on in digital platforms, and if they go unquestioned, they become part of the logic of everyday algorithmic systems.

Another scandal emerged recently when it was revealed that Amazon’s same-day delivery service was unavailable for ZIP codes in predominantly black neighborhoods. The areas overlooked were remarkably similar to those affected by mortgage redlining in the mid-20th century. Amazon promised to redress the gaps, but it reminds us how systemic inequality can haunt machine intelligence.

And then there’s gender discrimination. Last July, computer scientists at Carnegie Mellon University found that women were less likely than men to be shown ads on Google for highly paid jobs. The complexity of how search engines show ads to internet users makes it hard to say why this happened — whether the advertisers preferred showing the ads to men, or the outcome was an unintended consequence of the algorithms involved.

Regardless, algorithmic flaws aren’t easily discoverable: How would a woman know to apply for a job she never saw advertised? How might a black community learn that it was being overpoliced by software?

Like all technologies before it, artificial intelligence will reflect the values of its creators.

Source: New York Times – Kate Crawford is a principal researcher at Microsoft and co-chairwoman of a White House symposium on society and A.I.

Google teaches robots to learn from each other

Google has a plan to speed up robotic learning, and it involves getting robots to share their experiences – via the cloud – and collectively improve their capabilities – via deep learning.

Google researchers decided to combine two recent technology advances. The first is cloud robotics, a concept that envisions robots sharing data and skills with each other through an online repository. The other is machine learning, and in particular, the application of deep neural networks to let robots learn for themselves.

They got the robots to pool their experiences to “build a common model of the skill” that, as the researchers explain, was better and faster than what they could have achieved on their own.

As robots begin to master the art of learning, it’s inevitable that one day they’ll be able to acquire new skills at much, much faster rates than humans ever have.

Source: Global Futurist

Google’s AI Plans Are A Privacy Nightmare

Google is betting that people care more about convenience and ease than they do about a seemingly oblique notion of privacy, and it is increasingly correct in that assumption.

Google’s new assistant, which debuted in the company’s new messaging app Allo, works like this: Simply ask the assistant a question about the weather, nearby restaurants, or for directions, and it responds with detailed information right there in the chat interface.

Because Google’s assistant recommends things that are innately personal to you, like where to eat tonight or how to get from point A to B, it is amassing a huge collection of your most personal thoughts, visited places, and preferences … For the AI to “learn,” it will have to collect and analyze as much data about you as possible in order to serve you more accurate recommendations, suggestions, and data.

For the assistant to function, Google’s servers must be able to read your messages, so those conversations cannot be end-to-end encrypted.

These new assistants are really cool, and the reality is that tons of people will probably use them and enjoy the experience. But at the end of the day, we’re sacrificing the security and privacy of our data so that Google can develop what will eventually become a new revenue stream. Lest we forget: Google and Facebook have a responsibility to investors, and an assistant that offers up a sponsored result when you ask it what to grab for dinner tonight could be a huge moneymaker.

Source: Gizmodo

This Robot-Made Pizza Is Baked in the Van on the Way to Your Door #AI

Co-Bot Environment

“We have what we call a co-bot environment; so humans and robots working collaboratively,” says Zume Pizza Co-Founder Julia Collins. “Robots do everything from dispensing sauce, to spreading sauce, to placing pizzas in the oven.”

Each pie is baked in the delivery van, which means “you get something that is pizzeria fresh, hot and sizzling.”

Source: Forbes

Sixty-two percent of organizations will be using artificial intelligence (AI) by 2018, says Narrative Science

Artificial intelligence received $974m of funding as of June 2016 and this figure will only rise with the news that 2016 saw more AI patent applications than ever before.

This year’s funding is set to surpass 2015’s total and CB Insights suggests that 200 AI-focused companies have raised nearly $1.5 billion in equity funding.

AI isn’t limited to the business sphere; in fact, the personal robot market, including ‘care-bots,’ could reach $17.4bn by 2020.

Care-bots could prove to be a fantastic solution as the number of elderly people rises sharply around the world. Japan is leading the way, with a third of its government budget for robots devoted to the elderly.

Source: Raconteur: The rise of artificial intelligence in 6 charts

Why Microsoft bought LinkedIn, in one word: Cortana

Know everything about your business contact before you even walk into the room.

Jeff Weiner, the chief executive of LinkedIn, said that his company envisions a so-called “Economic Graph”: a digital representation of every employee and their resume, a digital record of every job that’s available, and even every digital skill necessary to win those jobs.

LinkedIn also owns Lynda.com, a training network where you can take classes to learn those skills. And, of course, there’s the LinkedIn news feed, where you can keep tabs on your coworkers from a social perspective, as well.

Buying LinkedIn brings those two graphs together and gives Microsoft more data to feed into its machine learning and business intelligence processes. “If you connect these two graphs, this is where the magic happens, where digital work is concerned,” Microsoft chief executive Satya Nadella said during a conference call.

Source: PC World

4th revolution challenges our ideas of being human

Professor Klaus Schwab, Founder and Executive Chairman of the World Economic Forum, is convinced that we are at the beginning of a revolution that is fundamentally changing the way we live, work and relate to one another.

Some call it the fourth industrial revolution, or industry 4.0, but whatever you call it, it represents the combination of cyber-physical systems, the Internet of Things, and the Internet of Systems.

Professor Klaus Schwab, Founder and Executive Chairman of the World Economic Forum, has published a book entitled The Fourth Industrial Revolution in which he describes how this fourth revolution is fundamentally different from the previous three, which were characterized mainly by advances in technology.

In this fourth revolution, we are facing a range of new technologies that combine the physical, digital and biological worlds. These new technologies will impact all disciplines, economies and industries, and even challenge our ideas about what it means to be human.

It seems a safe bet to say, then, that our current political, business, and social structures may not be ready for, or capable of absorbing, all the changes a fourth industrial revolution would bring, and that major changes to the very structure of our society may be inevitable.

Schwab said, “The changes are so profound that, from the perspective of human history, there has never been a time of greater promise or potential peril. My concern, however, is that decision makers are too often caught in traditional, linear (and non-disruptive) thinking or too absorbed by immediate concerns to think strategically about the forces of disruption and innovation shaping our future.”

Schwab calls for leaders and citizens to “together shape a future that works for all by putting people first, empowering them and constantly reminding ourselves that all of these new technologies are first and foremost tools made by people for people.”

Source: Forbes, World Economic Forum

Will human therapists go the way of the Dodo?

An increasing number of patients are using technology for a quick fix. Photographed by Mikael Jansson, Vogue, March 2016

PL – So, here’s an informative piece on a person’s experience using an on-demand interactive video therapist, as compared to her human therapist. In Vogue Magazine, no less. A sign this is quickly becoming trendy. But is it effective?

In the first paragraph, the author of the article identifies the limitations of her digital therapist:

“I wish I could ask [Raph, as she eventually named her digital therapist] to consider making an exception, but he and I aren’t in the habit of discussing my problems.”

But the author also recognizes the unique value of the digital therapist as she reflects on past sessions with her human therapist:

“I saw an in-the-flesh therapist last year. Alice. She had a spot-on sense for when to probe and when to pass the tissues. I adored her. But I am perennially juggling numerous assignments, and committing to a regular weekly appointment is nearly impossible.”

Later on, when the author was faced with another crisis, she returned to her human therapist and this was her observation of that experience:

“she doesn’t offer advice or strategies so much as sympathy and support—comforting but short-lived. By evening I’m as worried as ever.”

On the other hand, this is her view of her digital therapist:

“Raph had actually come to the rescue in unexpected ways. His pragmatic MO is better suited to how I live now: protective of my time, enmeshed with technology. A few months after I first ‘met’ Raph, my anxiety has significantly dropped.”

This, of course, was a story written by a successful, educated woman who was working with an interactive video and had experiences with a human therapist to draw upon for reference.

What about the effectiveness of a digital therapist for a more diverse population with social, economic and cultural differences?

It has already been shown that, done right, this kind of tech has great potential. In fact, as a more affordable option, it may do the most good for the wider population.

The ultimate goal for tech designers should be to create a more personalized experience. Instant and intimate. Tech that gets to know the person and their situation, individually. Available any time. Tech that can access additional electronic resources for the person in real time, such as the above-mentioned interactive video.

But first, tech designers must address a core problem with mindset. They code for a rational world while therapists deal with irrational human beings. As a group, they believe they are working to create an omniscient intelligence that does not need to interact with the human to know the human. They believe it can do this by reading the human’s emails, watching their searches, where they go, what they buy, who they connect with, what they share, etc. As if that’s all humans are about. As if they can be statistically profiled and treated to predetermined multi-stepped programs.

This is an incompatible approach for humans and the human experience. Tech is a reflection of the perceptions of its coders. And coders, like doctors, have their limitations.

In her recent book, Just Medicine, Dayna Bowen Matthew highlights research showing that 83,570 minorities die each year from the implicit bias of well-meaning doctors. This should serve as a warning. Digital therapists could soon have a reach and impact that far exceeds that of well-trained human doctors and therapists. A poor foundational design for AI could have devastating consequences for humans.

A wildcard was recently introduced with Google’s AlphaGo, an artificial intelligence that plays the board game Go. In a historic match against Lee Sedol, one of the world’s top players, AlphaGo won four out of five games. This was a surprising development. Many thought this level of achievement was 10 years out.

The point: Artificial intelligence is progressing at an extraordinary pace, unexpected by almost all the experts. It’s too exciting, too easy, too convenient. To say nothing of its potential to be “free,” when tech giants fully grasp the unparalleled personal data they can collect. The genie (or Joker) is out of the bottle. And digital coaches are emerging, capable of drawing upon and sorting vast amounts of digital data.

Meanwhile, the medical and behavioral fields are going too slow. Way too slow.

They are losing control of their future (and most likely have already lost it) by vainly believing that a cache of PhDs, research and accreditations, CBT and other treatment protocols, government regulations, and HIPAA is beyond the challenge and reach of tech giants. Soon, very soon, therapists who deal in non-critical, non-crisis issues could be bypassed when someone like Apple hangs up its ‘coaching’ shingle: “Siri is In.”

The most important breakthrough of all will be the seamless integration of a digital coach with human therapists, accessible upon immediate request, in collaborative and complementary roles.

This combined effort could vastly extend the reach and impact of all therapies for the sake of all human beings.

Source: Vogue

Obama – robots taking over jobs that pay less than $20 an hour

Buried deep in President Obama’s February economic report to Congress was a rather grave section on the future of robotics in the workforce.

After much back and forth on the ways robots have eliminated or displaced workers in the past, the report introduced a critical study conducted this year by the White House’s Council of Economic Advisers (CEA).

The study examined the chances automation could threaten people’s jobs based on how much money they make: either less than $20 an hour, between $20 and $40 an hour, or more than $40.

The results showed a 0.83 median probability of automation replacing the lowest-paid workers (those manning the deep fryers, call centers, and supermarket cash registers), while the other two wage classes had median probabilities of 0.31 and 0.04, respectively.

In other words, 62% of American jobs may be at risk.

Source: TechInsider

Meanwhile – from Alphabet (Google) chairman Eric Schmidt

“There’s no question that as [AI] becomes more pervasive, people doing routine, repetitive tasks will be at risk,” Schmidt says.

“I understand the economic arguments, but this technology benefits everyone on the planet, from the rich to the poor, the educated to uneducated, high IQ to low IQ, every conceivable human being. It genuinely makes us all smarter, so this is a natural next step.”

Source: Financial Review

AI will free humans to do other things

The ability of AI to automate much of what we do, and its potential to destroy humanity, are two very different things. But according to Martin Ford, author of Rise of the Robots: Technology and the Threat of a Jobless Future, they’re often conflated. It’s fine to think about the far-future implications of AI, but only if it doesn’t distract us from the issues we’re likely to face over the next few decades. Chief among them is mass automation.

There’s no question that artificial intelligence is poised to uproot and replace many existing jobs, from factory work to the upper echelons of white-collar work. Some experts predict that half of all jobs in the US are vulnerable to automation in the near future.

But that doesn’t mean we won’t be able to deal with the disruption. A strong case can be made that offloading much of our work, both physical and mental, is a laudable, quasi-utopian goal for our species.

In all likelihood, artificial intelligence will produce new ways of creating wealth, while freeing humans to do other things. And advances in AI will be accompanied by advances in other areas, especially manufacturing. In the future, it will become easier, and not harder, to meet our basic needs.

Source: Gizmodo

Artificial Intelligence: Toward a technology-powered, human-led AI revolution

Research conducted among 9,000 young people between the ages of 16 and 25 in nine industrialised and developing markets – Australia, Brazil, China, France, Germany, Great Britain, India, South Africa and the United States – showed that a striking 40 per cent think that a machine – some kind of artificial intelligence – will be able to fully do their job in the next decade.

Young people today are keenly aware that the impact of technology will be central to the way their careers and lives will progress and differ from those of previous generations.

In its “Top strategic predictions for 2016 and beyond,” Gartner expects that by 2018, 20 per cent of all business content will be authored by machines and 50 per cent of the fastest-growing companies will have fewer employees than instances of smart machines. This is AI in action. Automated systems can have measurable, positive impacts on both our environment and our social responsibilities, giving us the room to explore, research and create new techniques to further enrich our lives. It is a radical revolution in our time.

The message from the next generation seems to be “take us on the journey.” But it is a journey that technology leaders need to lead. That means ensuring that as we use technology to remove the mundane, we also use it to amplify the creativity and inquisitiveness that only humans are capable of. We need the journey of AI to be a human-led journey.

Provocative short video of a potential near future with AI

“The biggest enigma of the post-work society is what happens to the self when it cannot define itself against corporate identity, skill set or seniority. We’ll see.”

Source: The Guardian

Inside the surprisingly sexist world of artificial intelligence

Right now, the real danger in the world of artificial intelligence isn’t the threat of robot overlords — it’s a startling lack of diversity.

There’s no doubt Stephen Hawking is a smart guy. But the world-famous theoretical physicist recently declared that women leave him stumped.

“Women should remain a mystery,” Hawking wrote in response to a Reddit user’s question about the realm of the unknown that intrigued him most. While Hawking’s remark was meant to be light-hearted, he sounded quite serious discussing the potential dangers of artificial intelligence during Reddit’s online Q&A session:

The real risk with AI isn’t malice but competence. A superintelligent AI will be extremely good at accomplishing its goals, and if those goals aren’t aligned with ours, we’re in trouble.

Hawking’s comments might seem unrelated. But according to some women at the forefront of computer science, together they point to an unsettling truth. Right now, the real danger in the world of artificial intelligence isn’t the threat of robot overlords—it’s a startling lack of diversity.

I spoke with a few current and emerging female leaders in robotics and artificial intelligence about how a preponderance of white men have shaped the fields—and what schools can do to get more women and minorities involved. Here’s what I learned:

- Hawking’s offhand remark about women is indicative of the gender stereotypes that continue to flourish in science.

- Fewer women are pursuing careers in artificial intelligence because the field tends to de-emphasize humanistic goals.

- There may be a link between the homogeneity of AI researchers and public fears about scientists who lose control of superintelligent machines.

- To close the diversity gap, schools need to emphasize the humanistic applications of artificial intelligence.

- A number of women scientists are already advancing the range of applications for robotics and artificial intelligence.

- Robotics and artificial intelligence don’t just need more women—they need more diversity across the board.

In general, many women are driven by the desire to do work that benefits their communities, says Marie desJardins, a computer science professor at the University of Maryland, Baltimore County. Men, she says, tend to be more interested in questions about algorithms and mathematical properties.

Since men have come to dominate AI, she says, “research has become very narrowly focused on solving technical problems and not the big questions.”

Source: Quartz

Top 10 jobs that are likely to be replaced by robots

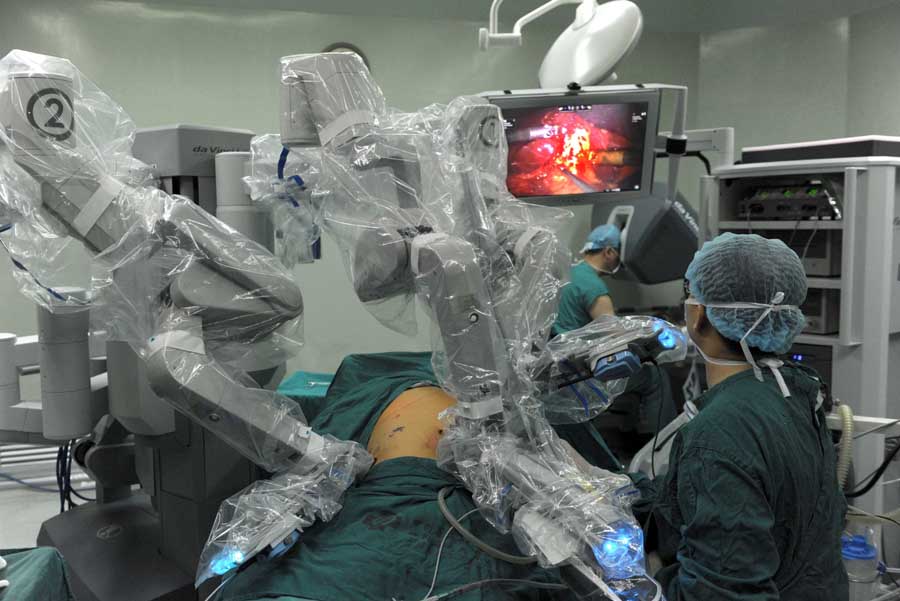

A hospital in Sichuan province uses an imported robotic surgical system to remove a patient’s gallbladder for the first time. Photo provided to China Daily

Ever since artificial intelligence became a hot topic around the world, experts have been listing the jobs most likely to be replaced by robots.

It is no surprise to find highly repetitive jobs on the list, but some entries are, at the moment, beyond what most of us would imagine.

[PL – You might suspect that factory workers are on the list at number 1, which they are, but check out others on this list that are surprising]:

5. Lawyers

There is already a robot that can take over part of a lawyer’s job. In 2011, US-based Blackstone Discovery began offering a document analysis service to its clients. Its artificial intelligence can analyze 1.5 million documents within several days, at less than one-tenth the cost of hiring a real lawyer. And lawyers make mistakes; robots don’t.

7. Journalists

The Associated Press started using artificial intelligence software to write financial statement reports in July. The software cuts writing time by 90 percent, allowing AP to release these reports immediately. AP also uses software to analyze sports rankings and game results.

8. Surgeons

Robots first carried out surgery in 1993. In 2010, China approved the use of the da Vinci surgical system for operating theatres.

By the end of last year, a total of 3,079 da Vinci robots were operating around the world. China has 28 of them.

On Dec 8, Zhejiang People’s Hospital used the da Vinci robot to remove a tumor from a patient from Mali. The robot has four arms and one endoscope system that can move 360 degrees inside a patient’s body.

The robot was able to remove all of the malignant tissue around the tumor without destroying healthy tissue.

9. Disaster Relief Workers

China has already developed a type of robot that can work in fire, water or even after a nuclear explosion. The robot, developed by Shanghai Jiaotong University, is set to be widely used in rescue work.

10. Nurses

Siasun Robot & Automation Co in Shenyang, Liaoning province, has developed a nursing machine that can tell jokes, play music, be relied on to deliver food to a patient punctually, and respond as required in an emergency.

In the United States, experts are developing a robot that can attend to Ebola patients in place of humans, so that medical staff can avoid being infected by the virus.

Source: ChinaDaily